Lecture Notes 9 Asymptotic (Large Sample) Theory 1 Review of o, O, etc.

Lecture Notes 9

Asymptotic (Large Sample) Theory

1

Review of o, O, etc.

1. an = o(1) mean an → 0 as n → ∞.

P

2. A random sequence An is op (1) if An −→ 0 as n → ∞.

P

3. A random sequence An is op (bn ) if An /bn −→ 0 as n → ∞.

√

√

P

4. nb op (1) = op (nb ), so n op (1/ n) = op (1)−→ 0.

5. op (1) × op (1) = op (1).

1. an = O(1) if |an | is bounded by a constant as n → ∞.

2. A random sequence Yn is Op (1) if for every > 0 there exists a constant M such that

limn→∞ P (|Yn | > M ) < as n → ∞.

3. A random sequence Yn is Op (bn ) if Yn /bn is Op (1).

4. If Yn

Y , then Yn is Op (1). (potential test qustion: prove this.)

√

√

5. If n(Yn − c)

Y then Yn = OP (1/ n). (potential test qustion: prove this.)

6. Op (1) × Op (1) = Op (1).

7. op (1) × Op (1) = op (1).

2

Distances Between Probability Distributions

Let P and Q be distributions with densities p and q. We will use the following distances

between P and Q.

1. Total variation distance TV(P, Q) = supA |P (A) − Q(A)|.

R

2. L1 distance d1 (P, Q) = |p − q|.

qR

√

√

( p − q)2 .

3. Hellinger distance h(P, Q) =

4. Kullback-Leibler distance K(P, Q) =

R

5. L2 distance d2 (P, Q) = (p − q)2 .

R

p log(p/q).

Here are some properties of these distances:

1. TV(P, Q) = 12 d1 (P, Q). (prove this!)

1

2. h2 (P, Q) = 2(1 −

R√

pq).

p

3. TV(P, Q) ≤ h(P, Q) ≤ 2TV(P, Q).

4. h2 (P, Q) ≤ K(P, Q).

p

5. TV(P, Q) ≤ h(P, Q) ≤ K(P, Q).

p

6. TV(P, Q) ≤ K(P, Q)/2.

3

Consistency

θbn = T (X n ) is consistent for θ if

P

θbn −→ θ

as n → ∞. In other words, θbn − θ = op (1). Here are two common ways to prove that θbn

consistent.

Method 1: Show that, for all ε > 0,

P(|θbn − θ| ≥ ε) −→ 0.

Method 2. Prove convergence in quadratic mean:

MSE(θbn ) = Bias2 (θbn ) + Var(θbn ) −→ 0.

qm

p

If bias → 0 and var → 0 then θbn → θ which implies that θbn → θ.

P

Example 1 Bernoulli(p). The mle pb has bias 0 and variance p(1 − p)/n → 0. So pb−→ p

and is consistent. Now let ψ = log(p/(1−p)). Then ψb = log(b

p/(1− pb)). Now ψb = g(b

p) where

P

b

g(p) = log(p/(1 − p)). By the continuous mapping theorem, ψ −→ ψ so this is consistent.

Now consider

X +1

pb =

.

n+1

Then

p−1

Bias = E(b

p) − p = −

→0

n(1 + n)

and

Var =

p(1 − p)

→ 0.

n

So this is consistent.

2

Example 2 X1 , . . . , Xn ∼ Uniform(0, θ). Let θbn = X(n) . By direct proof (we did it earlier)

P

we have θbn −→ θ.

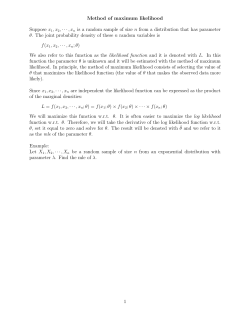

Method of moments estimators

typically consistent. Consider one parameter. Recall

Pare

n

−1

−1

b

exists and is continuous. So

that µ(θ) = m where m = n

i=1 Xi . Assume that µ

P

−1

b

θ = µ (m). By the WLLN m−→ µ(θ). So, by the continuous mapping Theorem,

P

θbn = µ−1 (m)−→ µ−1 (µ(θ)) = θ.

4

Consistency of the MLE

Under regularity conditions, the mle is consistent. Let us prove this in a special case. This

will also reveal a connection between the mle and Hellinger distance. Suppose that the model

consists of finitely many distinct densities {p0 , p1 , . . . , pN }. The likelihood function is

L(pj ) =

n

Y

pj (Xi ).

i=1

The mle pb is the density pj that maximizes L(pj ). Without loss of generality, assume that

the true density is p0 .

Theorem 3

P(b

p 6= p0 ) → 0

as n → ∞.

Proof. Let us begin by first proving an inequality. Let j = h(p0 , pj ). Then, for j 6= 0,

s

!

!

n

n

Y

Y

L(pj )

p

(X

)

p

(X

)

2

4

2

j

i

j

i

P

> e−nj /2 = P

> e−nj /2

= P

> e−nj /2

L(p0 )

p

(X

)

p

(X

)

i

0

i

i=1 0

i=1

s

s

!

!

n

n

Y

Y

p

(X

)

p

(X

)

2

2

j

i

j

i

≤ enj /4 E

= enj /4

E

p

(X

)

p

(X

0

i

0

i)

i=1

i=1

Z

n

n

n

2j

h2 (p0 , pj )

√

n2j /4

n2j /4

n2j /4

= e

pj p0

=e

1−

=e

1−

2

2

2 j

2

2

2

2

≤ enj /4 e−nj /2 = e−nj /2 .

= enj /4 exp n log 1 −

2

3

R√

We used the fact that h2 (p0 , pj ) = 2 − 2

p0 pj and also that log(1 − x) ≤ −x for x > 0.

Let = min{1 , . . . , N }. Then

L(pj )

−n2j /2

>e

for some j

P(b

p 6= p0 ) ≤ P

L(p0 )

N

X

L(pj )

−n2j /2

≤

>e

P

L(p0 )

j=1

≤

N

X

2

e−nj /2 ≤ N e−n

2 /2

→ 0.

j=1

We can prove a similar result using Kullback-Leibler distance as follows. Let X1 , X2 , . . .

be iid Fθ . Let θ0 be the true value of θ and let θ be some other value. We will show that

L(θ0 )/L(θ) > 1 with probability tending to 1. We assume that the model is identifiable;

this means that θ1 6= θ2 implies that K(θ1 , θ2 ) > 0 where K is the Kullback-Leibler distance.

Theorem 4 Suppose the model is identifiable. Let θ0 be the true value of the parameter.

For any θ 6= θ0

L(θ0 )

>1 →1

P

L(θ)

as n → ∞.

Proof. We have

1

(`(θ0 ) − `(θ)) =

n

n

n

1X

1X

log p(Xi ; θ0 ) −

log p(Xi ; θ)

n i=1

n i=1

p

→ E(log p(X; θ0 )) − E(log p(X; θ))

Z

Z

=

(log p(x; θ0 ))p(x; θ0 )dx − (log p(x; θ))p(x; θ0 )dx

Z p(x; θ0 )

=

p(x; θ0 )dx

log

p(x; θ)

= K(θ0 , θ) > 0.

So

P

L(θ0 )

>1

= P (`(θ0 ) − `(θ) > 0)

L(θ)

1

= P

(`(θ0 ) − `(θ)) > 0 → 1. n

This is not quite enough to show that θbn → θ0 .

4

Example 5 Inconsistency of an mle. In all examples so far n → ∞, but the number of

parameters is fixed. What if the number of parameters also goes to ∞? Let

Y11 , Y12

Y21 , Y22

..

.

Yn1 , Yn2

Some calculations show that

∼ N (µ1 , σ 2 )

∼ N (µ2 , σ 2 )

.

∼ ..

∼ N (µn , σ 2 ).

n X

2

X

(Yij − Y i )2

σ

b =

.

2n

i=1 j=1

2

It is easy to show (good test question) that

p

σ

b2 −→

σ2

.

2

Note that the modified estimator 2b

σ 2 is consistent.

The reason why consistency fails is because the dimension of the parameter space is

increasing with n.

Theorem 6 Under regularity conditions on the model {p(x; θ) : θ ∈ Θ}, the mle is consistent.

5

Score and Fisher Information

The score and Fisher information are the key quantities in many aspects of statistical inference. (See Section 7.3.2 of CB.) Suppose for now that θ ∈ R.

• L(θ) = p(xn ; θ)

• `(θ) = log L(θ)

• S(θ) =

∂

∂θ

`(θ) ← score function.

Recall that the value θb that maximizes L(θ) is the maximum likelihood estimator

(mle). Equivalently, θb maximizes `(θ). Note that θb = T (X1 , . . . , Xn ) is a function of the

data. Often, we get θb by differentiation. In that case θb solves

b = 0.

S(θ)

We’ll discuss the mle in detail later.

5

Some Notation: Recall that

Z

Eθ (g(X)) ≡

g(x)p(x; θ)dx.

Theorem 7 Under regularity conditions,

Eθ [S(θ)] = 0.

In other words,

Z

Z ···

∂ log p(x1 , . . . , xn ; θ)

∂θ

p(x1 , . . . , xn ; θ)dx1 . . . dxn = 0.

That is, if the expected value is taken at the same θ as we evaluate S, then the expectation

is 0. This does not hold when the θ’s mismatch: Eθ0 [S(θ1 )] 6= 0.

Proof.

∂ log p(xn ; θ)

p(xn ; θ) dx1 · · · dxn

∂θ

Z

Z ∂

p(xn ; θ)

∂θ

=

···

p(xn ; θ) dx1 · · · dxn

n

p(x ; θ)

Z

Z

∂

· · · p(xn ; θ) dx1 · · · dxn

=

∂θ

|

{z

}

Z

Eθ [S(θ)] =

Z

···

1

= 0.

Example 8 Let X1 , . . . , Xn ∼ N (θ, 1). Then

S(θ) =

n

X

(Xi − θ).

i=1

Warning: If the support of f depends on θ, then

R

···

R

and

∂

∂θ

cannot be switched.

The next quantity of interest is the Fisher Information or Expected Information.

The information is used to calculate the variance of quantities that arise in inference problems

b It is called information because it tells how much information is in the

such as the mle θ.

6

likelihood about θ. The definition is:

I(θ) = Eθ [S(θ)2 ]

2

= Eθ [S(θ)2 ] − Eθ [S(θ)]

= Varθ (S(θ))

since Eθ [S(θ)] = 0

2

∂

= Eθ − 2 `(θ) ← easiest way to calculate

∂θ

We will prove the final equality under regularity conditions shortly. I(θ) grows linearly in

n, so for an iid sample, a more careful notation would be In (θ)

" n

#

X ∂2

∂2

In (θ) = E − 2 l(θ) = E −

log p(Xi ; θ)

2

∂θ

∂θ

i=1

2

∂

log p(X1 ; θ) = nI1 (θ).

= −nE

∂θ2

Note that the Fisher information is a function of θ in two places:

• The derivate is w.r.t. θ and the information is evaluated at a particular value of θ.

• The expectation is w.r.t. θ also. The notation only allows for a single value of θ because

the two quantities should match.

A related quantity of interest is the observed information, defined as

n

X ∂2

∂2

Ibn (θ) = − 2 `(θ) = −

log p(Xi ; θ).

2

∂θ

∂θ

i=1

P

By the LLN n1 Ibn (θ)−→ I1 (θ). So observed information can be used as a good approximation

to the Fisher information.

h 2

i

−∂

2

Let us prove the identity: Eθ [S(θ) ] = Eθ ∂θ2 `(θ) . For simplicity take n = 1. First

note that

00 Z

Z

Z

Z 00

p

p

0

00

p=1 ⇒

p =0 ⇒

p =0 ⇒

p=0 ⇒ E

= 0.

p

p

Let ` = log p and S = `0 = p0 /p. Then `00 = (p00 /p) − (p0 /p)2 and

0 2

p

2

2

2

V (S) = E(S ) − (E(S)) = E(S ) = E

p

0 2

00 p

p

= E

−E

p

p

00 0 2 !

p

p

−

= −E

p

p

= −E(`00 ). 7

b ≈ 1/In (θ).

Why is I(θ) called “Information”? Later we will see that Var(θ)

The Vector Case. Let θ = (θ1 , · · · , θK ). L(θ) and `(θ) are defined as before.

h

i

∂`(θ)

• S(θ) = ∂θi

a vector of dimension K

i=1,··· ,K

• Information I(θ) = Var[S(θ)] is the variance-covariance matrix of

S(θ) = [Iij ]ij=1,··· ,k

where

Iij = −Eθ

∂ 2 `(θ)

.

∂θi ∂θj

b (This is the inverse of the matrix, evaluated at

• I(θ)−1 is the asymptotic variance of θ.

the proper component of the matrix.)

Example 9

X1 , · · · , Xn ∼ N (µ, γ)

n

Y

−n

−1

−1

1

2

2

√

(xi − µ) ∝ γ 2 exp

Σ(xi − µ)

exp

L(µ, γ) =

2γ

2γ

2πγ

i=1

n

1

`(µ, γ) = K − log γ − Σ(xi − µ)2

2

2γ

1

Σ(xi − µ)

γ

S(µ, γ) =

n

+ 2γ12 Σ(xi − µ)2

− 2γ

−n

−1

Σ(xi − µ)

γ

γ2

I(µ, γ) = −E −1

Σ(xi − µ) 2γn2 − γ13 Σ(xi − µ)2

γ2

n

0

γ

=

0 2γn2

You can check that Eθ (S) = (0, 0)T .

6

Efficiency and Asymptotic Normality

√

If n(θbn − θ)

N (0, v 2 ) then we call v 2 the asymptotic variance of θbn . This is not the same

as the limit of the variance which is limn→∞ nVar(θbn ).

Consider X n . In this case, the asymptotic variance is σ 2 . We also have that limn→∞ nVar(X n ) =

2

σ . In this case, they are the same. In general, the latter may be larger (or even infinite).

8

Example 10 (Example 10.1.10) Suppose we observe Yn ∼ N (0, 1) with probability pn and

Yn ∼ N (0, σn2 ) with probability 1 − pn . We can write this as a hierachical model:

Wn ∼ Bernoulli(pn )

Yn |Wn ∼ N (0, Wn + (1 − Wn )σn2 ).

Now,

Var(Yn ) = VarE(Yn |Wn ) + EVar(Yn |Wn )

= Var(0) + E(Wn + (1 − Wn )σn2 ) = pn + (1 − pn )σn2 .

Suppose that pn → 1, σn → ∞ and that (1 − pn )σn2 → ∞. Then Var(Yn ) → ∞. Then

P(Yn ≤ a) = pn P(Z ≤ a) + (1 − pn )P(Z ≤ a/σn ) → P(Z ≤ a)

and so Yn

N (0, 1). So the asymptotic variance is 1.

Suppose we want to estimate τ (θ). Let

v(θ) =

where

I(θ) = Var

∂

log p(X; θ)

∂θ

|τ 0 (θ)|2

I(θ)

= −Eθ

∂2

log p(X; θ) .

∂θ2

We call v(θ) the Cramer-Rao lower bound. Generally, any well-behaved estimator

will

√

have a limiting variance bigger than or equal to v(θ). We say that Wn is efficient if n(Wn −

τ (θ))

N (0, v(θ)).

Theorem 11 Let X1 , X2 , . . . , be iid. Assume that the model satisfies the regularity conditions in 10.6.2. Let θb be the mle. Then

√

b − τ (θ))

n(τ (θ)

N (0, v(θ)).

b is consistent and efficient.

So τ (θ)

We will now prove the asymptotic normality of the mle.

Theorem 12

√

1

N 0,

.

I(θ)

n(θbn − θ)

Hence,

θbn = θ + OP

9

1

√

n

.

Proof. By Taylor’s theorem

b = `0 (θ) + (θb − θ)`00 (θ) + · · · .

0 = `0 (θ)

Hence

√

n(θb − θ) ≈

Now

√1 `0 (θ)

n

− n1 `00 (θ)

=

A

.

B

n

√

√

1

1X

A = √ `0 (θ) = n ×

S(θ, Xi ) = n(S − 0)

n i=1

n

where S(θ, Xi ) is the score function based on Xi . Recall that

p E(S(θ, Xi )) = 0 and Var(S(θ, Xi )) =

I(θ). By the central limit theorem, A

N (0, I(θ)) = I(θ)Z where Z ∼ N (0, 1). By the

WLLN,

P

B −→ − E(`00 ) = I(θ).

By Slutsky’s theorem

p

I(θ)Z

1

Z

= N 0,

=p

.

I(θ)

I(θ)

I(θ)

A

B

So

√

n(θb − θ)

1

N 0,

. I(θ)

Theorem 11 follows by the delta method:

√

n(θbn − θ)

N (0, 1/I(θ))

implies that

√

N (0, (τ 0 (θ))2 /I(θ)).

n(τ (θbn ) − τ (θ))

The standard error of θb is

s

se =

s

1

=

nI(θ)

The estimated standard error is

s

se

b =

1

b

In (θ)

1

.

In (θ)

.

b is

The standard error of τb = τ (θ)

s

se =

|τ 0 (θ)|

=

nI(θ)

10

s

|τ 0 (θ)|

.

In (θ)

The estimated standard error is

s

se

b =

b

|τ 0 (θ)|

.

b

In (θ)

Example 13 X1 , · · · , Xn iid Exponential (θ). Let t = x. So: p(z; θ) = θe−θx , L(θ) =

, I(θ) =

e−nθt+n ln θ , l(θ) = −nθt + n ln θ, S(θ) = nθ − nt ⇒ θb = 1t = X1 , l00 (θ) = −n

θ2

2

E[−l00 (θ)] = n2 , θb ≈ N θ, θ .

θ

n

Example 14 X1 , . . . , Xn ∼ Bernoulli(p). The mle is pb = X. The Fisher information for

n = 1 is

1

.

I(p) =

p(1 − p)

So

√

n(b

p − p)

N (0, p(1 − p)).

Informally,

p(1 − p)

pb ≈ N p,

.

n

The asymptotic variance is p(1 − p)/n. This can be estimated by pb(1 − pb)/n. That is, the

estimated standard error of the mle is

r

pb(1 − pb)

se

b =

.

n

Now suppose we want to estimate τ = p/(1 − p). The mle is τb = pb/(1 − pb). Now

1

∂ p

=

∂p 1 − p

(1 − p)2

The estimated standard error is

r

se(b

b τ) =

7

1

pb(1 − pb)

×

=

n

(1 − pb)2

s

pb

.

n(1 − pb)3

Relative Efficiency

If

√

n(Wn − τ (θ))

√

n(Vn − τ (θ))

2

N (0, σW

)

2

N (0, σV )

then the asymptotic relative efficiency (ARE) is

ARE(Vn , Wn ) =

11

2

σW

.

σV2

Example 15 (10.1.17). Let X1 , . . . , Xn ∼ Poisson(λ). The mle of λ is X. Let

τ = P(Xi = 0).

So τ = e−λ . Define Yi = I(Xi = 0). This suggests the estimator

n

Wn =

1X

Yi .

n i=1

Another estimator is the mle

Vn = e−λ .

b

The delta method gives

Var(Vn ) ≈

λe−2λ

.

n

We have

√

n(Wn − τ )

√

n(Vn − τ )

N (0, e−λ (1 − e−λ ))

N (0, λe−2λ ).

So

ARE(Wn , Vn ) =

eλ

λ

≤ 1.

−1

Since the mle is efficient, we know that, in general, ARE(Wn , mle) ≤ 1.

8

Robustness

The mle is efficient only if the model is right. The mle can be bad if the model is wrong.

That is why we should consider using nonparametric methods. One can also replace the mle

with estimators that are more robust.

Suppose we assume that X1 , . . . , Xn ∼ N (θ, σ 2 ). The mle is θbn = X n . Suppose, however

that we have a perturbed model Xi is N (θ, σ 2 ) with probability 1 − δ and Xi is Cauchy with

probability δ. Then, Var(X n ) = ∞.

Consider the median Mn . We will show that

ARE(median, mle) = .64.

But, under the perturbed model the median still performs well while the mle is terrible. In

other words, we can trade efficiency for robustness. Let us now find the limiting distribution

of Mn .

√

Let Yi = I(Xi ≤ µ + a/ n). Then Yi ∼ Bernoulli(pn ) where

√

a

1

a

pn = P (µ + a/ n) = P (µ) + √ p(µ) + o(n−1/2 ) = + √ p(µ) + o(n−1/2 ).

2

n

n

12

Also,

P

i

Yi has mean npn and standard deviation

p

σn = npn (1 − pn ).

Note that,

a

Mn ≤ µ + √

n

if and only if

X

Yi ≥

i

n+1

.

2

Then,

!

X

√

n+1

a

=P

P( n(Mn − µ) ≤ a) = P Mn ≤ µ + √

Yi ≥

2

n

i

P

n+1

− npn

i Yi − npn

≥ 2

.

= P

σn

σn

Now,

n+1

2

− npn

→ −2ap(µ)

σn

and hence

√

Z

Z

P( n(Mn − µ) ≤ a) → P(Z ≥ −2ap(µ)) = P −

≤a =P

≤a

2p(µ)

2p(µ)

so that

√

n(Mn − µ)

N

For a standard Normal, (2p(0))2 = .64.

13

1

0,

(2p(µ))2

.

© Copyright 2025