Ultra-miniature omni-directional camera for an autonomous flying micro-robot

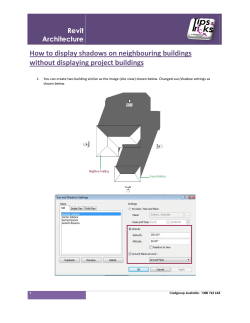

Pascal Ferrat, Christiane Gimkiewicz, Simon Neukom, Yingyun Zha, Alain Brenzikofer, Thomas Baechler
CSEM Centre Suisse d’Electronique et Microtechnique SA, Zurich Center, Technoparkstrasse 1, CH-8005 Zurich, Switzerland, Phone +41-44-497 14 00, www.csem.ch, imaging@csem.ch

ABSTRACT

CSEM presents a highly integrated ultra-miniature camera module with an omni-directional view, dedicated to autonomous micro flying devices. It fulfills very tight design and integration requirements on size, weight and power consumption for the optical, microelectronic and electronic components. The presented ultra-miniature camera platform is based on two major components: a catadioptric lens system and a dedicated image sensor. The optical system consists of a hyperbolic mirror and an imaging lens. The vertical field of view is +10° to -35°. The CMOS image sensor provides a polar pixel field with 128 (horizontal) by 64 (vertical) pixels. Since the number of pixels on each circle is constant, the unwrapped panoramic image has a constant resolution in the polar direction for all image regions. The camera module delivers 40 frames per second and performs optical image preprocessing, so that the acquired image can be remapped effortlessly into undistorted cylindrical coordinates. The complete camera weighs less than 5 g. Its outer dimensions are 14.4 mm in height with an 11.4 mm x 11.4 mm footprint. Thanks to the innovative PROGLOG™ technology, a dynamic range of over 140 dB is achieved.

Keywords: omni-directional camera, muFly, PROGLOG™, catadioptric lens system, polar pixel field, CMOS imager

1. INTRODUCTION

A photograph of the presented camera module is shown in Figure 1. It combines omni-directionality with a polar pixel field and a high dynamic range in a very compact design. Circular pixel field layouts became prominent in the area of foveated image sensors ([2], [3]).
Foveation means that the pixel resolution is higher in the center of the sensor than close to its outer border, in order to mimic the human retina. In the pixel field of the presented camera module, however, the height of the pixels is constant. Thus the panoramic image can be remapped to Cartesian coordinates by the simple transformation $r \rightarrow y$, $\alpha \rightarrow x$, as shown in Figure 2. Apart from this effortless remapping, the main advantage of the presented camera module over the one published by Yagi et al. ([5]) is its size of about 2 cm³. A high dynamic range is achieved by an innovative pixel design patented by CSEM ([4]).

Figure 1 Picture of the presented camera module

Figure 2 Remapping of the polar pixel field to an image in Cartesian coordinates

The presented camera module is designed as part of the muFly project [1], which started in June 2006 and is funded by the European Commission (EC Contract No. FP6-IST-034120). The goal of this project is to design a fully autonomous micro-helicopter weighing less than 50 g. During flight, the helicopter has to navigate on its own without any remote control. Autonomous navigation includes orientation, following a predetermined route, and obstacle avoidance. The motivation for developing a fully autonomous micro-helicopter is that it can be used to collect data from dangerous premises such as collapsed buildings. For such missions, the helicopter could be equipped, e.g., with a standard high-resolution camera or other sensors for acquiring the desired data.

Today's miniaturized camera designs are driven by the cell-phone market, which requires very small and low-cost camera modules that can be integrated directly into the handsets. However, these cameras are optimized for the cell-phone application and not for the stringent requirements of a micro-robot, i.e.
with respect to:

- Omni-directionality
- High frame rate
- Large field of view
- High dynamic range for reliable indoor and outdoor operation
- High sensitivity for reliable operation under low-light conditions
- Optical pre-processing for effortless remapping of the acquired image into undistorted cylindrical coordinates

These requirements lead to the target specifications of the camera module for the muFly project listed in Table 1.

Table 1 Target specifications of the camera module

| Subject | Requirement |
|---|---|
| Resolution of pixel field | 64 x 128 pixels |
| Vertical field of view | -35° to +10° |
| Precision of image sensor outputs | 10 bits |
| Frame rate | up to 40 frames/s, programmable |
| Dynamic range | > 140 dB |
| Weight | < 5 g |
| Physical dimensions | ≈ 2 cm³ |
| Power consumption | < 1 mW |

A high dynamic range is an important requirement to allow the use of the camera in both indoor and outdoor applications. Depending on the illumination conditions, one picture can contain very bright and very dim areas. If only the programmable exposure time is available to adjust the brightness of a picture, either the bright areas are over-exposed or the dim areas are under-exposed. To circumvent this problem, CSEM's PROGLOG™ technology is used on the image sensor. Below a certain amount of light, the response of the photodiode is linear, whereas above this threshold the response is logarithmic. In other words, areas that are brighter than the threshold are compressed logarithmically, which avoids over-exposure.

In section 2, the image sensor and the PROGLOG™ pixel implemented on it are discussed in more detail. The complete camera module is described in section 3. After the discussion of two possible applications of this camera module in section 4, the paper is concluded in section 5.

2. IMAGE SENSOR

2.1 PROGLOG™ pixels for high dynamic range

In order to achieve an effortless remapping of the acquired image into undistorted cylindrical coordinates, a polar pixel field layout is employed.
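To illustrate why the polar layout makes the remapping effortless, the following Python sketch contrasts two cases. With a polar pixel field, ring index and column index already are the image coordinates, so unwrapping is a plain copy; a conventional square-pixel sensor would instead have to resample the ring-shaped image region. The function names, the `frame[ring][column]` data layout (ring 0 innermost) and the nearest-neighbour resampling are illustrative assumptions, not the sensor's actual output format.

```python
import math

N_RINGS, N_COLUMNS = 64, 128  # polar pixel field size (Table 1)


def unwrap_polar_sensor(frame):
    """Polar pixel field: ring index -> y, column index -> x.

    Hypothetical layout: frame[ring][column], ring 0 = innermost circle.
    The panoramic image is simply a copy; no geometry or interpolation is needed.
    """
    return [list(ring_values) for ring_values in frame]


def unwrap_cartesian_sensor(img, cx, cy, r_min, r_max, n_rings=N_RINGS, n_cols=N_COLUMNS):
    """For comparison: resample the annulus r_min..r_max around (cx, cy) of a
    square-pixel image onto an n_rings x n_cols grid (nearest neighbour).
    Output row y corresponds to radius r_min + (y + 0.5) * ring height."""
    height, width = len(img), len(img[0])
    ring_height = (r_max - r_min) / n_rings
    out = []
    for y in range(n_rings):
        r = r_min + (y + 0.5) * ring_height
        row = []
        for x in range(n_cols):
            alpha = 2.0 * math.pi * (x + 0.5) / n_cols
            px = round(cx + r * math.cos(alpha))
            py = round(cy + r * math.sin(alpha))
            inside = 0 <= px < width and 0 <= py < height
            row.append(img[py][px] if inside else 0)  # 0 for samples outside the image
        out.append(row)
    return out


if __name__ == "__main__":
    # Synthetic square-pixel image whose value encodes the ring index of each pixel.
    size, centre, r0, dr = 201, 100, 20, 8
    img = [[int((math.hypot(px - centre, py - centre) - r0) // dr) for px in range(size)]
           for py in range(size)]
    pano = unwrap_cartesian_sensor(img, centre, centre, r0, r0 + 8 * dr, n_rings=8, n_cols=32)
    print([row[0] for row in pano])  # first sample of each unwrapped row
```

The Cartesian path needs a trigonometric evaluation and a memory lookup per output pixel, which is the kind of processing the polar pixel field avoids on a platform with very limited computing power.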
The pixel field is divided into 64 concentric circles (rows) and 128 radials (columns). All pixels have a height of 30 μm. The width of the pixels increases proportionally with the radius, leaving virtually no gaps between adjacent pixels on a circle (see Figure 3). In this layout, the photodiode of one pixel is marked with FD and the sense node with SN (corresponding to the notation in Figure 4). The five transistors controlling the pixel are packed into the square labeled 5T.

Figure 3 Zoom into the layout of the pixel field

The spatially variant pixel pitch of the polar-radial geometry compensates for the non-uniform resolution properties of the central catadioptric lens system, resulting in a cylindrical re-projection of the image. Since no computational power is available on-chip to correct for vignetting and sensitivity variations, the effective pixel size is adapted so that all pixels have the same specific response to incident radiation despite their varying size. This is achieved by designing pixels of different size and shape that all keep an identical ratio of effective capacitance to geometrical fill factor (fF/μm²). To achieve the highest possible dynamic range, the PROGLOG™ technology is used in the pixels (see Figure 4).

Figure 4 Schematic drawing of a conventional 5T-pixel (a) and of a 5T-pixel employing the PROGLOG™ technology (b)

In principle, the PROGLOG™ pixel is a 5-transistor pixel (5T-pixel) with a global reset gl_rs, a global shutter gl_sh and an additional diode connected to the photodiode. The basic working principle of the conventional 5T-pixel shown in the left schematic is as follows. At the beginning of an exposure period, all floating diffusions (FD) are reset to the supply voltage VDD by asserting the signal gl_rs. As soon as the global reset is deasserted, the photodiode starts to discharge the node FD at a rate linearly proportional to the power of the incident light.
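As a first-order idealization of this linear phase, assume a constant photocurrent $I_{ph}$ (proportional to the incident light power) and a voltage-independent capacitance $C_{FD}$ of the node FD; both symbols, and the exposure time $t_{exp}$, are introduced here for illustration only. The node voltage then falls linearly from its reset value:

$$V_{FD}(t) = V_{DD} - \frac{Q(t)}{C_{FD}} = V_{DD} - \frac{I_{ph}\,t}{C_{FD}}, \qquad 0 \le t \le t_{exp}$$

Under these assumptions, the voltage swing $V_{DD} - V_{FD}(t_{exp}) = I_{ph}\,t_{exp}/C_{FD}$ is proportional to the light energy collected during the exposure, which is the linear behaviour of the conventional 5T-pixel.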
Since the photodiode discharges the node FD, a low voltage on this node results from a lot of light, whereas a high voltage results from very little light. Because the node FD acts as a capacitor, its voltage is directly proportional to the accumulated charge. By asserting the global shutter, the accumulated charge is shared between the floating diffusion FD and the sense node SN. After the global shutter is deasserted again, the voltage on the sense node can be read out through the source follower by asserting the row select signal row_sel. The row reset signal row_res is used to subtract the reset value from the signal value in the double-sampling stage (DSS), which cancels the reset noise. Because all operations inside the conventional 5T-pixel are linear, the value read out from the sense node is linearly proportional to the energy of the incident light.

By employing the additional diode in the PROGLOG™ pixel, the response of the pixel becomes linear-logarithmic. As long as the voltage on the floating diffusion FD stays above $V_{log} - V_{th}$, where $V_{th}$ is the threshold voltage of the diode, the diode does not conduct and leaves the charge accumulated on the floating diffusion unaffected. As soon as the voltage on the node FD drops below this limit, the diode starts to conduct and compensates for part of the photocurrent generated by the photodiode. Because the current-voltage characteristic of a diode is exponential, the voltage on the node FD then decreases logarithmically instead of linearly. Although the energy of the incident light increases linearly, the voltage on the floating diffusion drops only logarithmically, which strongly compresses high light energies.

Figure 5 shows the response of the photodiode for various settings of the control voltage Vlog. The light power on the abscissa is drawn on a logarithmic scale.
Because of this logarithmic abscissa, the linear region of the response (low illumination) appears as an exponentially increasing curve.

Figure 5 Linear-logarithmic response of the photodiode for various settings of the control voltage Vlog

The effect of the logarithmic compression of high input light power is illustrated in Figure 6. The images show a window with a black panel left of the image center and two bright light sources on the right. Comparing the two images, one can clearly see the areas that are over-exposed by sunlight and by the bright light sources when the PROGLOG™ functionality is switched off. In particular, the black panel in the window is strongly distorted by the over-exposure in image (a).

Figure 6 Images acquired with the presented camera module without (a) and with (b) PROGLOG™ functionality

2.2 Top-level design considerations

The top-level block diagram of the image sensor is shown in Figure 7 (a) and a photograph of the packaged image sensor in Figure 7 (b). The minimum sensitivity required of the innermost pixel dictates a minimum radius of the pixel field; the area in the center is therefore not light sensitive. The maximum radius of the pixel field is 2.1 mm. This includes the 64 active pixel rows as well as one row of passive dummy pixels, which is added for matching reasons. Because of the size of the light-sensitive area, the pixel field is divided into four quadrants, with one read-out circuit (double-sampling stage DSS and analog-to-digital converter ADC) per quadrant. This shortens the wire connections between the pixels and the read-out circuits and minimizes the capacitive loads and signal delays. Running the four read-out circuits in parallel relaxes the speed requirements of the DSS / ADC read-out blocks, making their design simpler, less power consuming and more robust. Every read-out circuit has 32 connections to the pixel field, one per column.
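The geometry and read-out figures above can be cross-checked with a few lines of arithmetic. The Python sketch below derives the implied inner radius and pixel widths from the 2.1 mm outer radius, 65 rings of 30 μm and 128 columns, and compares the conversion rate one ADC would need with the rate per ADC when four quadrants are read in parallel. Assumptions (not stated in the text): the dummy ring is the outermost one, columns are numbered consecutively so that each quadrant owns 32 adjacent columns, only active pixels are converted, and the payload figure excludes any serial-interface overhead.

```python
import math

# Values from the text; dummy-ring position (outermost) and column numbering
# (consecutive per quadrant) are illustrative assumptions.
N_ACTIVE_RINGS = 64
N_DUMMY_RINGS = 1
N_COLUMNS = 128
PIXEL_HEIGHT_UM = 30.0
OUTER_RADIUS_UM = 2100.0
N_QUADRANTS = 4
FRAME_RATE_HZ = 40
BITS_PER_PIXEL = 10

COLS_PER_QUADRANT = N_COLUMNS // N_QUADRANTS  # 32 column lines per read-out circuit


def inner_radius_um() -> float:
    """Radius of the non-sensitive centre implied by the outer radius and ring count."""
    return OUTER_RADIUS_UM - (N_ACTIVE_RINGS + N_DUMMY_RINGS) * PIXEL_HEIGHT_UM


def pixel_width_um(ring: int) -> float:
    """Arc length of one pixel at the mid-radius of active ring `ring` (0 = innermost)."""
    mid_radius = inner_radius_um() + (ring + 0.5) * PIXEL_HEIGHT_UM
    return 2.0 * math.pi * mid_radius / N_COLUMNS


def readout_block(column: int) -> tuple[int, int]:
    """Map a global column index to (quadrant, local column line)."""
    if not 0 <= column < N_COLUMNS:
        raise ValueError("column index out of range")
    return divmod(column, COLS_PER_QUADRANT)


def conversions_per_second(n_converters: int) -> int:
    """Conversions each ADC must perform per second to read every active pixel."""
    return N_ACTIVE_RINGS * N_COLUMNS * FRAME_RATE_HZ // n_converters


def raw_payload_bit_per_s() -> int:
    """Pixel data rate before any interface overhead (framing, sync) is added."""
    return N_ACTIVE_RINGS * N_COLUMNS * BITS_PER_PIXEL * FRAME_RATE_HZ


if __name__ == "__main__":
    print(f"inner radius: {inner_radius_um():.0f} um")
    print(f"pixel width, innermost ring: {pixel_width_um(0):.1f} um")
    print(f"pixel width, outermost active ring: {pixel_width_um(N_ACTIVE_RINGS - 1):.1f} um")
    print(f"column 77 -> (quadrant, local column) = {readout_block(77)}")
    print(f"single ADC: {conversions_per_second(1)} conversions/s")
    print(f"four ADCs:  {conversions_per_second(N_QUADRANTS)} conversions/s each")
    print(f"raw pixel payload: {raw_payload_bit_per_s()} bit/s")
```

Under these assumptions the non-sensitive centre has a radius of 150 μm, the innermost pixels are only about 8 μm wide while the outermost active ones are about 101 μm wide, and splitting the field into four quadrants cuts the required rate per ADC from 327,680 to 81,920 conversions per second at 40 frames/s.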
During read-out, the row decoder selects the row (signals row_sel and row_res in Figure 4) to be sampled and converted.

Figure 7 (a) Top-level block diagram and (b) die photograph of the packaged image sensor

To minimize the wire length (and thereby the signal delays), every DSS / ADC read-out block is assigned its own digital controller. The digitized 10-bit values from the ADC controllers are collected by the output controller, which transmits the data over a serial interface. A serial video data interface is not optimal from the image sensor's point of view, but its choice is dictated by system design aspects: it minimizes the pin count of the IC, allowing for a smaller and lighter package. The image sensor also includes an SPI-compatible programming interface, through which the frame rate, exposure time and various modes can be configured. The sensor controller acts as the main controller of the image sensor.

3. CAMERA MODULE

A large variety of construction principles exists for panoramic cameras, using opto-mechanical, refractive and reflective elements. Given the constraints of an omni-directional, lightweight, small and robust camera for micro-robotics, a catadioptric system (a combination of mirrors and lenses) is judged most practical. The mirror shape determines the quality, aberrations and distortions of the acquired images. In miniaturized optical systems, aspheric mirrors are the most difficult to fabricate, but they offer the largest number of degrees of freedom with respect to low aberrations and field of view. The optical sub-system is described in more detail in [6]. The image sensor has a radial-polar geometry, with a pixel spacing that increases with the radius and is matched to the properties of the central catadioptric lens system. A schematic drawing of the camera module, consisting of a catadioptric lens system and a dedicated image sensor, is shown in Figure 8.
The complete module, including optics, microelectronics and electronics, has been developed at CSEM's Zurich center. The gold-coated mirror reflects the incoming light through the imaging lens system, which in turn focuses the image onto the image sensor. To adjust the focus of the lens onto the surface of the image sensor, the lens holder is equipped with a thread; for this reason, the lens holder and its counterpart are made of aluminum. The mirror and the lenses are made of polystyrene.

Figure 8 Schematic drawing of the camera module with optical module and image sensor

4. APPLICATIONS

4.1 Distance measurement by means of triangulation

Together with a laser on top of the helicopter, the camera developed at CSEM can be used for navigation and collision avoidance by means of triangulation. A wide horizontal field of view is mandatory because the helicopter needs to determine in which direction to turn when it encounters an obstacle. With a 360° view, the helicopter continuously observes its entire surroundings, which allows it to find the safest route. The laser emits a 360° laser plane. Depending on the distance of an object to the camera, the reflection of this laser plane on the object is mapped to different locations on the image sensor, as sketched in Figure 9. The reflection on a close object is mapped closer to the outer border of the pixel field, whereas reflections on distant objects are mapped closer to the center. A central processing unit on the helicopter reads the images from the camera, determines the shape of the reflected laser plane in the image and calculates the distances to the objects in the environment to be inspected.

Figure 9 Principle drawing of the triangulation system

The complete camera module as it is used on the muFly helicopter is depicted in Figure 10. It is mounted on the helicopter by four thin metal rails.
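The triangulation principle of Figure 9 can be sketched in Python under a simplified geometry. The sketch assumes that the laser sits a baseline $b$ = 12.7 cm above the camera and that its plane is tilted downward by $\tau$ = 6° (the values quoted for Figure 11); a reflection at horizontal distance $d$ is then seen at elevation $\theta = \arctan(b/d - \tan\tau)$, so $d = b / (\tan\theta + \tan\tau)$. The linear mapping of the -35° to +10° field of view onto 64 rows is a hypothetical stand-in for the real mapping, which is fixed by the optics. All names are illustrative.

```python
import math

# Baseline and tilt are the Figure 11 values. The geometry (laser above the camera,
# plane tilted downward) and the linear angle-to-row mapping are assumptions.
BASELINE_M = 0.127
TILT_RAD = math.radians(6.0)
FOV_TOP_DEG, FOV_BOTTOM_DEG = 10.0, -35.0
N_ROWS = 64
ROW_PITCH_DEG = (FOV_TOP_DEG - FOV_BOTTOM_DEG) / N_ROWS  # 0.703125 degrees per row


def elevation_of_distance(d_m: float) -> float:
    """Elevation angle (rad) under which the laser line on an object d_m away is seen."""
    return math.atan(BASELINE_M / d_m - math.tan(TILT_RAD))


def distance_of_elevation(theta_rad: float) -> float:
    """Inverse mapping d = b / (tan(theta) + tan(tau)).

    Returns infinity when the viewing ray does not meet the laser plane in front of the camera.
    """
    denom = math.tan(theta_rad) + math.tan(TILT_RAD)
    return BASELINE_M / denom if denom > 0.0 else math.inf


def row_of_distance(d_m: float) -> int:
    """Row hit by the reflection; row 0 is the +10 degree edge of the field of view."""
    theta_deg = math.degrees(elevation_of_distance(d_m))
    if not FOV_BOTTOM_DEG <= theta_deg <= FOV_TOP_DEG:
        raise ValueError("reflection lies outside the vertical field of view")
    return min(int((FOV_TOP_DEG - theta_deg) / ROW_PITCH_DEG), N_ROWS - 1)


def distance_span_of_row(row: int) -> tuple[float, float]:
    """Nearest and farthest object distance whose reflection falls onto `row`."""
    upper_edge = math.radians(FOV_TOP_DEG - row * ROW_PITCH_DEG)
    lower_edge = math.radians(FOV_TOP_DEG - (row + 1) * ROW_PITCH_DEG)
    return distance_of_elevation(upper_edge), distance_of_elevation(lower_edge)


if __name__ == "__main__":
    for d in (0.6, 1.0, 1.5, 2.0, 2.5):
        row = row_of_distance(d)
        near, far = distance_span_of_row(row)
        print(f"{d:.1f} m -> row {row:2d}; this row covers {near:.2f} m to {far:.2f} m")
```

In this simplified model, a single row spans only a few centimetres of distance near 0.6 m but more than half a metre near 2.5 m, and objects closer than about 0.45 m would reflect the laser above the +10° edge of the field of view.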
The data transfer between the camera and the central DSP aboard the helicopter is accomplished by the two flat ribbon cables attached to the upper wing of the printed circuit board (PCB). The central DSP stores the images and uses them for navigation.

Figure 10 Photograph of the camera module used on the muFly helicopter

Figure 11 Error of distance measurement with a laser tilt angle of 6° and a distance from the laser to the camera of 12.7 cm

Figure 11 shows the error in distance measurement versus distance for a laser tilt angle of 6° and a distance from the laser to the camera of 12.7 cm. The error increases at far distances because the width of the laser line is smaller than the height of the pixels: due to the non-linear mapping of the object distance to the pixel location, the laser line illuminates the same pixel over a large range of distances. For closer distances this effect is smaller, because only a very short range of distances is mapped to the same pixel.

For distance measurements by means of triangulation, the following three problems are identified:

- The vertical field of view is not optimized for triangulation. A smaller vertical field of view leads to a better geometrical resolution and therefore to a more accurate distance measurement.
- The power of the laser currently used is only sufficient for distance measurements up to two to three meters. This range can be increased by using a more powerful laser as well as by improving the sensitivity of the camera module.
- The lasers currently used generate a square-shaped line instead of a circular one. The reflection of this kind of laser line on a cylindrical surface leads to an error in the distance measurement, as shown in Figure 12.

Figure 12 (a) Illustration of the projection of a straight laser line on a cylindrical surface.
(b) Resulting error in the distance measurement on the pixel field

Because the system is designed to detect the reflection of a laser line and the laser should consume as little power as possible, the relative quantum efficiency of the camera module is of interest (see Figure 13). The plot shows the measurements of two different camera modules. From the graph it can be observed that a laser wavelength of around 600 nm is desirable. To improve the detection of the laser line in ambient light, optical band-pass filters can be inserted between the optical sub-system and the image sensor.

Figure 13 Relative quantum efficiency of the camera module

4.2 Stereo vision

By placing two camera modules on top of each other, one of them upside down, the total vertical field of view increases to -35° to +35°. The overlapping area of the fields of view allows stereo vision of the captured objects, as sketched in Figure 14. With an object recognition algorithm processing the images from both cameras, the distance $D$ to an object can be calculated from the distance $D_{cam}$ between the two cameras and the two object ray angles $\alpha_1$ and $\alpha_2$ according to

$$D = \frac{D_{cam}}{\cot\alpha_1 + \cot\alpha_2}$$

The design of the optics determines the mapping between the vertical viewing angle and the row of the pixel field on which the object is seen. Therefore, the viewing angles $\alpha_1$ and $\alpha_2$ in the above formula can be derived from the object's position in the two images by means of a simple look-up table storing this mapping. Stereo vision systems using two omni-directional cameras have been presented in [7] and [8]. Using two of the ultra-miniature cameras presented here has the big advantage of a very compact and lightweight system. This allows the use of such a stereo vision system in areas where weight, power consumption and size are a major concern.

Figure 14 Application of two omni-directional camera modules for stereo vision

5. CONCLUSIONS

Compared to existing omni-directional camera systems, the presented highly integrated camera platform is much smaller and lighter. Thanks to the PROGLOG™ technology, it has a dynamic range of over 140 dB, allowing its use in illumination conditions that are very challenging for conventional cameras. Because the optical pre-processing remaps the image into undistorted cylindrical coordinates, the acquired image can be used directly, without any coordinate transformations. Delivering 40 images per second, this camera is well suited for fast-moving robots. Thanks to its lightweight, compact and low-power design, the presented ultra-miniature camera module can be used in various other applications where omni-directionality, size and power consumption are a main concern. It is ideally suited for applications requiring an instantaneous panoramic view including 3D distance information. Possible application fields are automotive, surveillance and security, robotics, navigation and collision avoidance.

ACKNOWLEDGEMENT

I thank the members of division P of CSEM's Zurich center for their support and contribution to the design of this camera module, as well as Samir Bouabdallah and all the other members of the muFly consortium for the fruitful discussions. This publication has been supported by the 6th Framework Programme of the European Commission, contract number FP6-IST-034120, Action line: Cognitive systems. The content of this document reflects only the author's views. The European Community is not liable for any use that may be made of the information contained therein.

REFERENCES

[1] www.mufly.org
[2] G. Sandini, V. Tagliasco, "An Anthropomorphic Retina-like Structure for Scene Analysis," Computer Graphics and Image Processing, vol. 14, no. 3, pp. 365-372, 1980.
[3] F. Pardo, B. Dierickx, D. Scheffer, "Space-Variant Nonorthogonal Structure CMOS Image Sensor Design," IEEE Journal of Solid-State Circuits, vol. 33, no. 6, pp. 842-849, 1998.
[4] P. Seitz, F. Lustenberger, "Photo sensor with pinned photodiode and sub-linear response," European Patent, Publication no. EP1845706.
[5] Y. Yagi, S. Kawato, S. Tsuji, "Real-Time Omnidirectional Image Sensor (COPIS) for Vision-Guided Navigation," IEEE Transactions on Robotics and Automation, vol. 10, no. 1, pp. 11-22, 1994.
[6] C. Gimkiewicz, C. Urban, E. Innerhofer, P. Ferrat, S. Neukom, G. Vanstraelen, P. Seitz, "Ultra-miniature catadioptrical system for omnidirectional camera," SPIE Photonics Europe conference, Paper 699218.
[7] L. Spacek, "A catadioptric sensor with multiple viewpoints," Robotics and Autonomous Systems, vol. 51, issue 1, pp. 3-15, 2005.
[8] M. Fiala, A. Basu, "Panoramic stereo reconstruction using non-SVP optics," Computer Vision and Image Understanding, vol. 98, issue 3, pp. 363-397, 2005.
