Bibliometrin i biblioteket (Bibliometrics in the Library)

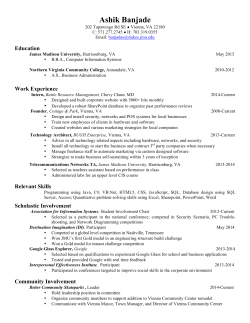

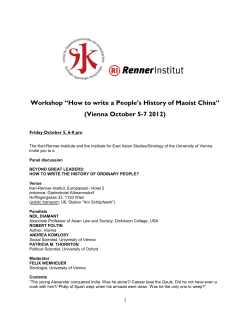

Measuring research quality in the humanities: evaluation systems, publication practices and possible effects

Björn Hammarfelt (bjorn.hammarfelt@hb.se)

Swedish School of Library and Information Science (SSLIS), University of Borås. Visiting scholar at the Centre for Science and Technology Studies, Leiden University.

University of Vienna, 7 May, 2015

Outline

- Introduction
- The organization of research in the humanities
- How to measure social impact in the humanities
- The possible consequences of bibliometric evaluation
- Challenge: How to handle demands for accountability?

Organization: Diverse audience

Hicks (2004), four types of literatures:

- journal articles
- books
- national literature
- non-scholarly literature

Nederhof (2006), three audiences:

- international scholars
- researchers on the national or regional level
- non-scholarly audience

Organization: Intellectual organization

Low dependence on colleagues (Whitley, 1984):

- fragmentation
- interdisciplinarity

High degree of task uncertainty (Whitley, 1984)

Rural (Becher & Trowler, 2001):

- slow pace
- little pressure to publish results fast
- less competition / lower rewards
- greater freedom (still?)

Organization: Arguments

- Argument 1: Intellectual organization influences referencing patterns (Hellqvist, 2010)
- Argument 2: The intellectual organization of the humanities makes it harder to come up with systematic measures of quality
- Argument 3: Systematic, strong evaluation systems have greater effects in fields characterized by high task uncertainty and low dependence on colleagues (Whitley 2007)

Social impact

Impact: "The action of one object coming forcibly into contact with another" (OED)

What is (not) social impact for the humanities?

"Rather than the humanities being pre-scientific, it is the natural sciences which until very recently have been presocial." (Gibbons et al., 1994, p. 99)

Social impact: Altmetrics

http://www.altmetric.com/top100/2014/

Pro:

- Possibility to measure the impact of a range of different dissemination forms: articles, chapters, books, webpages, blogs…
- No "citation delay"
- Impact not restricted to a scholarly audience

Con:

- In reality restricted to journal articles (mostly in English) with DOI
- Quality of data a major concern
- Doubts about what is really measured: quality, popularity or mere advertisement?

Source: Hammarfelt (2014)

Social impact: Impact case study

- More in line with the epistemology of the humanities
- Provides in-depth, thick descriptions that might also facilitate reflection
- Requires a lot of work for both evaluee and evaluator

REF impact case studies: http://impact.ref.ac.uk/CaseStud rch1.aspx

Evaluation in context

Publication practices and evaluation systems: Faculty of Arts, Uppsala University

- Bibliometric data on publication patterns
- Survey of publication practices/attitudes
- Moments of metric: 2009 (national, citations) and 2011 (local, Norwegian model)

Source: Hammarfelt & de Rijcke (2015)

"As everybody knows, and as all answers will confirm, we are moving from publishing monographs to publishing articles, and this is not always beneficial for the humanities" [Established historian, R68]

"Never throw away good ideas or research in book chapters. Everything that requires work should be published in international peer reviewed journals, otherwise it's a waste of time (both for authors and readers) . . ." [Novice historian, R83]

Evaluation in context: Internal and external demands

"It's a problem that the status of monographs is very uneven - they definitely count as an advantage in my field, but not in funding and general academia. Thus, I have focused on writing articles to be on the safe side [. . .]" [Novice literary scholar, R71]

Evaluation in context: Publication strategies

"More and more scholars adapt to new publication strategies to have any chance in the tough competition for permanent positions within the field" [Novice literary scholar, R89]

"Young researchers appear to be more inclined to adapt to the pressures of financiers and models for allocation of resources. This is understandable but regrettable." [Established historian, R20]

1. Highly regarded within the discipline
2. Peer reviewed
3. Quality of peer review
4. International reach/visibility
5. Suggestions from coauthors/colleagues
6. Demands from funding agency
7. Counts in evaluation schemes
8. Speed of publication
9. Open Access
10. Indexed in international databases (WoS, Scopus)

Challenge

How to handle demands for accountability and transparency?

- Protest
- Ignore
- Play along
- Game the system
- Engage in the construction of the system

Literature

- Aagaard, K. (2015). How incentives trickle down: Local use of a national bibliometric indicator system. Science and Public Policy, pp. 1-13.
- Becher, T. & Trowler, P. R. (2001). Academic tribes and territories: Intellectual enquiry and the cultures of disciplines. Buckingham, UK: Open University Press.
- Dahler-Larsen, P. (2012). The evaluation society. Stanford: Stanford University Press.
- Gibbons, M., Limoges, C., Nowotny, H., Schwartzman, S., Scott, P. & Trow, M. (1994). The new production of knowledge: The dynamics of science and research in contemporary societies. London: SAGE Publications.
- Hammarfelt, B. (2014). Using altmetrics for assessing research impact in the humanities. Scientometrics, 101(2), 1419-1430.
- Hammarfelt, B. & de Rijcke, S. (2015). Accountability in context: Effects of research evaluation systems on publication practices, disciplinary norms and individual working routines in the faculty of Arts at Uppsala University. Research Evaluation, 24(1), 63-77.
- Hellqvist, B. (2010). Referencing in the humanities and its implications for citation analysis. JASIST, 61(2), 310-318.
- Hicks, D. (2004). The four literatures of social science. In H. F. Moed et al. (Eds.), Handbook of quantitative science and technology research (pp. 473-496). Dordrecht: Kluwer Academic Publishers.
- Nederhof, A. J. (2006). Bibliometric monitoring of research performance in the social sciences and the humanities: A review. Scientometrics, 66(1), 81-100.
- Whitley, R. (2000). The intellectual and social organization of the sciences (2nd ed.). Oxford: Oxford University Press.

Thank you for your attention!
