Lec 01: Feedback Control - Distributed Control of Robotic Networks


Lecture 1: Feedback control

Jorge Cortés

March 30, 2015

Abstract

The treatment corresponds to selected parts from Chapter 6 in [1] and Chapter 12 in [2].

Contents

1 Introduction
1.1 Stabilization with respect to an arbitrary point
1.2 Regional, global and semiglobal stabilization
1.3 Tracking in the presence of disturbance
1.4 Tidbit: plotting phase portraits
2 Stabilization by state feedback control
3 Stabilization by output feedback control
4 Integral control
4.1 Integral control via linearization: state feedback integral controller

1 Introduction

Consider the system on $\mathbb{R}^n$,

$$\dot{x} = f(t, x, u). \qquad (1)$$

The state feedback stabilization problem for the system (1) is the problem of designing a feedback control law $u = \gamma(t, x)$ such that the origin is a uniformly asymptotically stable equilibrium point of the closed-loop system

$$\dot{x} = f(t, x, \gamma(t, x)).$$

The control law $u = \gamma(t, x)$ is called static feedback because it is a memoryless function of $x$. One can also use a dynamic feedback control

$$u = \gamma(t, x, z),$$

where $z$ is the solution of a dynamical system driven by $x$, that is,

$$\dot{z} = g(t, x, z).$$

Common examples of dynamic state feedback include integral control and adaptive control. In the case of dynamic feedback control, the origin to be stabilized is $x = 0$, $z = 0$.

MAE281b – Nonlinear Control. Copyright © 2008-2015 by Jorge Cortés. Permission is granted to copy, distribute and modify this file, provided that the original source is acknowledged.

Consider the system

$$\dot{x} = f(t, x, u), \qquad (2a)$$
$$y = h(t, x, u). \qquad (2b)$$

The output feedback stabilization problem for the system (2) is the problem of designing a static output feedback control law $u = \gamma(t, y)$ or a dynamic output feedback control law $u = \gamma(t, y, z)$, $\dot{z} = g(t, y, z)$, such that the origin is a uniformly asymptotically stable equilibrium point of the closed-loop system. In the case of dynamic feedback control, the origin to be stabilized is $x = 0$, $z = 0$.

Dynamic controllers are more common in output feedback schemes, since the lack of measurement of some

state variables makes it useful to introduce “observer”-like components.

1.1 Stabilization with respect to an arbitrary point

Given a desired $x^* \in \mathbb{R}^n$, assume there exists a value of the input $u^*$ such that $f(t, x^*, u^*) = 0$ for all $t \geq 0$ (i.e., $u^*$ makes the state $x^*$ an equilibrium). The change of variables

$$\bar{x} = x - x^*, \qquad \bar{u} = u - u^*$$

results in

$$\dot{\bar{x}} = f(t, x^* + \bar{x}, u^* + \bar{u}) = \bar{f}(t, \bar{x}, \bar{u}),$$

with $\bar{f}(t, 0, 0) = 0$ for all $t \geq 0$. Therefore, there is no loss of generality in taking the origin as the equilibrium. For output feedback problems, the output is redefined as

$$\bar{y} = h(t, x^* + \bar{x}, u^* + \bar{u}) - h(t, x^*, u^*) = \bar{h}(t, \bar{x}, \bar{u}).$$

1.2 Regional, global and semiglobal stabilization

If the feedback control guarantees that a certain set is included in the region of attraction or if an estimate

of the region of attraction is given, we say that the feedback control achieves regional stabilization.

If the origin of the closed-loop system is globally asymptotically stable, we say that the control achieves global

stabilization.

If the feedback control does not achieve global stabilization, but can be designed such that any given compact

set (no matter how large) can be included in the region of attraction, we say that the feedback control achieves

semiglobal stabilization.

Example 1.1 Consider the scalar system

$$\dot{x} = x^2 + u.$$

Linearization around the origin results in $\dot{x} = u$, which can be stabilized with $u = -kx$, $k > 0$. This results in

$$\dot{x} = -kx + x^2.$$


Therefore, $u = -kx$ achieves local stabilization. In this example, one can see that the region of attraction is $\{x \in \mathbb{R} \mid x < k\}$: since $\dot{x} = x(x - k)$, solutions starting at $x < k$ converge to the origin, while solutions starting at $x > k$ escape to infinity in finite time. Hence, $u = -kx$ achieves regional stabilization. Given any compact set, we can choose $r > 0$ such that the set is included in the ball $B(0, r)$. This ball can be included in the region of attraction by choosing $k > r$. Therefore, $u = -kx$ achieves semiglobal stabilization. However, it does not achieve global stabilization, no matter which $k$ we use. Global stabilization is achieved with the control law $u = -x^2 - kx$. •
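The region-of-attraction claim in Example 1.1 can be checked numerically. The sketch below assumes NumPy and SciPy are available. The function names and the escape threshold of $10^3$ are illustrative choices, not part of the text. It simulates $\dot{x} = -kx + x^2$ from several initial conditions and stops the integration if the state blows up.

```python
import numpy as np
from scipy.integrate import solve_ivp


def closed_loop(t, x, k):
    """Example 1.1 under u = -k x: xdot = x**2 + u = -k x + x**2."""
    return -k * x + x**2


def escaped(t, x, k):
    """Event function: crosses zero when |x| reaches the (arbitrary) threshold 1e3."""
    return abs(x[0]) - 1e3


escaped.terminal = True  # stop integrating once the solution is escaping


def converges_to_origin(x0, k, t_final=20.0):
    """Return True if the solution from x0 stays bounded and ends near 0."""
    sol = solve_ivp(closed_loop, (0.0, t_final), [x0], args=(k,),
                    events=escaped, rtol=1e-8, atol=1e-10)
    return sol.status == 0 and abs(sol.y[0, -1]) < 1e-3


k = 1.0
for x0 in (-5.0, 0.5, 0.9, 1.2, 2.0):
    print(f"x0 = {x0:5.1f}, k = {k}: converges = {converges_to_origin(x0, k)}")

# Semiglobal stabilization: a larger k enlarges the region of attraction {x < k}.
print(converges_to_origin(2.0, k=3.0))
```

Initial conditions below $k$ converge, and those above $k$ trigger the escape event. Raising $k$ to 3 captures $x_0 = 2$, which illustrates the semiglobal argument.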

1.3 Tracking in the presence of disturbance

Consider the system

$$\dot{x} = f(t, x, u, w), \qquad (3a)$$
$$y = h(t, x, u, w). \qquad (3b)$$

The goal is to design the control input $u$ so that the controlled output $y$ tracks a reference signal $r$, that is,

$$e(t) = y(t) - r(t) \simeq 0, \qquad t \geq t_0,$$

where $t_0$ is the time when the control starts.

Note that the initial value $y(t_0)$ depends on the initial state $x(t_0)$. Therefore, meeting this requirement for all $t \geq t_0$ would require either presetting $x(t_0)$ or presetting the initial value of the reference signal, assuming knowledge of $x(t_0)$. Instead, we usually seek asymptotic output tracking, or asymptotic regulation, i.e.,

$$e(t) \to 0, \qquad t \to \infty.$$

If asymptotic output tracking is achieved in the presence of the disturbance input $w$, we say that asymptotic disturbance rejection has been achieved.

For a general time-varying disturbance input $t \mapsto w(t)$, it might not be possible to achieve asymptotic disturbance rejection. In such cases, we attempt to achieve disturbance attenuation, e.g., a requirement of the form

$$\|e(t)\| \leq \varepsilon, \qquad t \geq t_0.$$

Alternatively, we may consider attenuating the closed-loop input-output map from the disturbance input $w$ to the tracking error $e$.

Feedback control laws for the tracking problem are classified (local, regional, global, semiglobal) in the same way as for the stabilization problem. The only difference is that the definitions refer not only to the size of the initial state $x$, but also to the size of the exogenous signals $r$ and $w$.

1.4 Tidbit: plotting phase portraits

Both Mathematica and Matlab have commands or routines that you can invoke to plot phase portraits. These are often useful to get a rough idea of the behavior of planar systems, or to confirm or contradict some intuition about the solutions of the system.

In Mathematica, you can use VectorPlot in 2D and, for those with really good spatial vision, VectorPlot3D in 3D. In Matlab, you can use pplane.m and dfield.m provided by Prof. Polking from Rice University at http://math.rice.edu/~dfield.
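For readers working in Python, Matplotlib's `streamplot` does much the same job as the tools above. This is a substitute; the text does not mention it. The sketch below samples a planar vector field on a grid and, if Matplotlib is installed, draws its streamlines. The helper names `vector_field` and `plot_phase_portrait` are hypothetical. The example field is the closed-loop pendulum shown in Figure 1.

```python
import numpy as np


def vector_field(f, xlim, ylim, n=25):
    """Sample a planar vector field f(x, y) -> (xdot, ydot) on an n-by-n grid."""
    xs = np.linspace(xlim[0], xlim[1], n)
    ys = np.linspace(ylim[0], ylim[1], n)
    X, Y = np.meshgrid(xs, ys)
    U, V = f(X, Y)
    return X, Y, U, V


def plot_phase_portrait(f, xlim, ylim, n=25):
    """Draw streamlines of f with Matplotlib (imported lazily so sampling works without it)."""
    import matplotlib.pyplot as plt
    X, Y, U, V = vector_field(f, xlim, ylim, n)
    fig, ax = plt.subplots()
    ax.streamplot(X, Y, U, V, density=1.2)
    ax.set_xlabel("x")
    ax.set_ylabel("y")
    return fig


# Closed-loop pendulum of Figure 1: a = b = c = 1, k1 = k2 = 1, theta_des = pi/2.
a = b = c = k1 = k2 = 1.0
t_des = np.pi / 2


def pendulum(x, y):
    """x = theta, y = theta_dot."""
    return y, -a * (np.sin(x) - np.sin(t_des)) - k1 * c * (x - t_des) - (b + k2 * c) * y


# Usage (requires Matplotlib):
# plot_phase_portrait(pendulum, (-2, 4), (-4, 4)).savefig("pendulum_phase_portrait.png")
```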

2 Stabilization by state feedback control

Consider the nonlinear control system

$$\dot{x} = f(x, u),$$

where $f(0, 0) = 0$ and $f$ is continuously differentiable in a domain $D_x \times D_u \subset \mathbb{R}^n \times \mathbb{R}^p$ that contains $(0, 0)$. Our objective is to design a state feedback control law $u = \gamma(x)$ that stabilizes the origin $x = 0$.

Linearization of the system around $x = 0$, $u = 0$ yields

$$\dot{x} = Ax + Bu, \qquad A = \frac{\partial f}{\partial x}(x, u)\Big|_{x=0,u=0}, \qquad B = \frac{\partial f}{\partial u}(x, u)\Big|_{x=0,u=0}.$$

Assume that a matrix $K$ exists such that $A - BK$ has all its eigenvalues in the open left half of the complex plane. (In general, such a $K$ need not exist; it exists exactly when the pair $(A, B)$ is stabilizable, and we will return to this later.) Consider then the control law $u = -Kx$. The closed-loop nonlinear system now reads

$$\dot{x} = f(x, -Kx).$$

Clearly, the origin is an equilibrium point of this system. Moreover, its linearization around the origin is

$$\dot{y} = \left[\frac{\partial f}{\partial x}(x, -Kx) + \frac{\partial f}{\partial u}(x, -Kx)(-K)\right]_{x=0} y = (A - BK)y.$$

Since $A - BK$ is Hurwitz, it follows from the Lyapunov indirect method (see [2, Theorem 4.7]) that the origin is an asymptotically stable equilibrium point of the closed-loop system. In fact, according to [2, Theorem 4.13], the origin is exponentially stable. As a byproduct of this approach, we can always find a Lyapunov function for the closed-loop system, which can serve, for instance, to determine an estimate of the region of attraction. Given any positive definite symmetric matrix $Q$, solve the Lyapunov equation

$$P(A - BK) + (A - BK)^T P = -Q$$

for $P$. This equation has a unique solution, which is symmetric and positive definite, because $A - BK$ is Hurwitz [2, Theorem 4.6]. Once it is solved, we define the quadratic Lyapunov function $V(x) = x^T P x$.
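Numerically, the Lyapunov equation above can be solved with SciPy. The sketch below assumes `scipy.linalg.solve_continuous_lyapunov`, which solves $aX + Xa^H = q$. Passing $a = (A - BK)^T$ and $q = -Q$ therefore gives the equation in the text. The double integrator $\ddot{x} = u$ with $K = [1, 2]$ is a hypothetical example, chosen only because $P$ can be checked by hand.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov


def lyapunov_matrix(A_cl, Q):
    """Solve P A_cl + A_cl^T P = -Q for a Hurwitz matrix A_cl.

    solve_continuous_lyapunov(a, q) solves a X + X a^H = q, so the equation
    in the text corresponds to a = A_cl^T and q = -Q.
    """
    if np.max(np.linalg.eigvals(A_cl).real) >= 0:
        raise ValueError("A - BK must be Hurwitz")
    P = solve_continuous_lyapunov(A_cl.T, -Q)
    return 0.5 * (P + P.T)  # remove round-off asymmetry


# Hypothetical example: double integrator xddot = u with K = [1, 2].
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
K = np.array([[1.0, 2.0]])
A_cl = A - B @ K                      # [[0, 1], [-1, -2]], eigenvalues -1, -1
P = lyapunov_matrix(A_cl, np.eye(2))
print(P)                              # V(x) = x^T P x is a Lyapunov function
```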

Example 2.1 (Forced pendulum) Consider the pendulum equation

$$\ddot{\theta} = -a\sin\theta - b\dot{\theta} + cT, \qquad (4)$$

where $a = g/l > 0$, $b = k/m \geq 0$, $c = 1/(ml^2) > 0$, $\theta$ is the angle subtended by the rod and the vertical axis, and $T$ is the torque applied to the pendulum. Suppose we want to stabilize the pendulum at an angle $\theta = \theta_{des}$. First of all, we need to apply a torque $T_{des}$ that makes $\theta_{des}$ an equilibrium,

$$-a\sin\theta_{des} + cT_{des} = 0.$$

Let us choose the state variables $x_1 = \theta - \theta_{des}$ and $x_2 = \dot{\theta}$, and the control variable $u = T - T_{des}$. The equations now read

$$\dot{x}_1 = x_2,$$
$$\dot{x}_2 = -a(\sin(x_1 + \theta_{des}) - \sin\theta_{des}) - bx_2 + cu.$$

Note that $f(0, 0) = 0$. The linearization at the origin results in

$$A = \begin{bmatrix} 0 & 1 \\ -a\cos\theta_{des} & -b \end{bmatrix}, \qquad B = \begin{bmatrix} 0 \\ c \end{bmatrix}.$$


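Taking $u = -Kx = -K[x_1, x_2]^T$, with $K = [k_1, k_2]$, one can see that

$$A - BK = \begin{bmatrix} 0 & 1 \\ -a\cos\theta_{des} - ck_1 & -b - ck_2 \end{bmatrix},$$

with eigenvalues

$$\lambda = \frac{-(ck_2 + b) \pm \sqrt{(ck_2 + b)^2 - 4(a\cos\theta_{des} + ck_1)}}{2}.$$

Therefore, $A - BK$ is Hurwitz as long as $k_1 > -\frac{a}{c}\cos\theta_{des}$ and $k_2 > -\frac{b}{c}$. The torque is then given by

$$T = \frac{a}{c}\sin\theta_{des} - Kx = \frac{a}{c}\sin\theta_{des} - k_1(\theta - \theta_{des}) - k_2\dot{\theta}.$$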

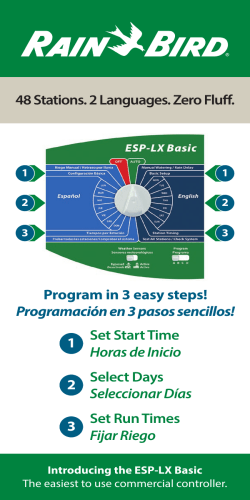

See Figure 1 for an illustration of this control.

•

[Figure 1 plot: phase portrait in the $(x, y) = (\theta, \dot{\theta})$ plane of $x' = y$, $y' = -a(\sin x - \sin\theta_{des}) - k_1 c(x - \theta_{des}) - (b + k_2 c)y$, with $a = b = c = 1$, $k_1 = k_2 = 1$, $\theta_{des} = \pi/2$.]

Figure 1: Phase portrait of the pendulum example exponentially stabilized around the point θdes = π/2. The

values of the parameters are a = b = c = 1 and k1 = k2 = 1.
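To complement Figure 1, here is a minimal simulation sketch of Example 2.1 with the same values ($a = b = c = 1$, $k_1 = k_2 = 1$, $\theta_{des} = \pi/2$), assuming NumPy and SciPy. It checks the Hurwitz conditions and integrates the nonlinear closed loop under the designed torque. The initial condition $\theta(0) = 0$, $\dot{\theta}(0) = 0$ is an arbitrary choice and is not taken from the text.

```python
import numpy as np
from scipy.integrate import solve_ivp

a, b, c = 1.0, 1.0, 1.0          # parameters from Figure 1
k1, k2 = 1.0, 1.0
theta_des = np.pi / 2

A = np.array([[0.0, 1.0], [-a * np.cos(theta_des), -b]])
B = np.array([[0.0], [c]])
K = np.array([[k1, k2]])
eig_cl = np.linalg.eigvals(A - B @ K)   # linearized closed-loop eigenvalues


def torque(theta, omega):
    """T = (a/c) sin(theta_des) - k1 (theta - theta_des) - k2 theta_dot."""
    return a / c * np.sin(theta_des) - k1 * (theta - theta_des) - k2 * omega


def pendulum(t, s):
    """Nonlinear pendulum (4) driven by the state feedback torque."""
    theta, omega = s
    return [omega, -a * np.sin(theta) - b * omega + c * torque(theta, omega)]


sol = solve_ivp(pendulum, (0.0, 20.0), [0.0, 0.0], rtol=1e-9, atol=1e-11)
theta_final = sol.y[0, -1]
print("eigenvalues of A - BK:", eig_cl)
print("final angle:", theta_final, "target:", theta_des)
```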

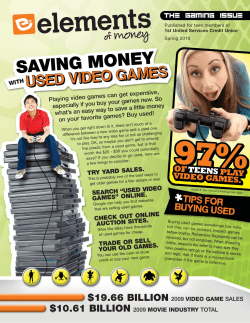

Example 2.2 (Tracking with the Robobrain robot) Consider the Robobrain robot in Figure 2. Its evolution can be modeled by the following unicycle model:

$$\dot{x} = u\cos\theta, \qquad \dot{y} = u\sin\theta, \qquad \dot{\theta} = v,$$

where $u$ corresponds to the forward velocity and $v$ corresponds to the turning velocity. Assume our objective is to make the robot track a vertical trajectory $x = x_0$ (and therefore, $\theta = \pi/2$).


Figure 2: Robobrain robot

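Let us make the change of coordinates $\bar{x} = x - x_0$, $\bar{y} = y$, $\bar{\theta} = \theta - \pi/2$ to obtain

$$\dot{\bar{x}} = u\cos(\bar{\theta} + \pi/2) = -u\sin\bar{\theta},$$
$$\dot{\bar{y}} = u\sin(\bar{\theta} + \pi/2) = u\cos\bar{\theta},$$
$$\dot{\bar{\theta}} = v.$$

Our objective is now to drive the system toward the line $\{(0, \bar{y}, 0)\}$. Therefore, we choose a proportional controller of the form $u = u_c > 0$, $v = -k_1\bar{x} - k_2\bar{\theta}$. This choice yields the system

$$\dot{\bar{x}} = -u_c\sin\bar{\theta},$$
$$\dot{\bar{y}} = u_c\cos\bar{\theta},$$
$$\dot{\bar{\theta}} = -k_1\bar{x} - k_2\bar{\theta}.$$

The linearization of the system at $(0, \bar{y}, 0)$ is

$$\dot{\bar{x}} = -u_c\bar{\theta},$$
$$\dot{\bar{y}} = u_c,$$
$$\dot{\bar{\theta}} = -k_1\bar{x} - k_2\bar{\theta}.$$

The submatrix corresponding to $\bar{x}$ and $\bar{\theta}$ is

$$\begin{bmatrix} 0 & -u_c \\ -k_1 & -k_2 \end{bmatrix},$$

with eigenvalues

$$\lambda = \frac{-k_2 \pm \sqrt{k_2^2 + 4u_ck_1}}{2}.$$

Therefore, for $k_2 > 0$ and $k_1 < 0$, we deduce that the feedback controller

$$u = u_c, \qquad v = -k_1(x - x_0) - k_2\left(\theta - \frac{\pi}{2}\right)$$

achieves (local) tracking of the desired trajectory. Figure 3 shows the resulting $(x, \theta)$-phase portrait.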

•

[Figure 3 plot: $(x, \theta)$-phase portrait of $x' = u_c\cos\theta$, $\theta' = -k_1(x - x_0) - k_2(\theta - \pi/2)$, with $u_c = 0.5$, $x_0 = 1$, $k_1 = -1$, $k_2 = 1$.]

Figure 3: (x, θ)-phase portrait of the Robobrain example. The values of the parameters are x0 = 1, uc = .5,

k1 = −1 and k2 = 1.
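The following sketch checks Example 2.2 with the values of Figure 3 ($u_c = 0.5$, $x_0 = 1$, $k_1 = -1$, $k_2 = 1$), assuming NumPy and SciPy. It computes the eigenvalues of the linearized $(\bar{x}, \bar{\theta})$ block and simulates the full unicycle under the controller. The initial pose $(x, y, \theta) = (0.5, 0, 1.2)$ is an assumption chosen for illustration.

```python
import numpy as np
from scipy.integrate import solve_ivp

uc, x0, k1, k2 = 0.5, 1.0, -1.0, 1.0      # values from Figure 3

# Linearized (x_bar, theta_bar) block and its eigenvalues.
M = np.array([[0.0, -uc], [-k1, -k2]])
eig_lin = np.linalg.eigvals(M)


def unicycle(t, s):
    """Unicycle model under u = uc, v = -k1 (x - x0) - k2 (theta - pi/2)."""
    x, y, theta = s
    v = -k1 * (x - x0) - k2 * (theta - np.pi / 2)
    return [uc * np.cos(theta), uc * np.sin(theta), v]


# Assumed initial pose (not from the text): left of the line, heading off-vertical.
sol = solve_ivp(unicycle, (0.0, 40.0), [0.5, 0.0, 1.2], rtol=1e-9, atol=1e-11)
x_final, y_final, theta_final = sol.y[:, -1]
print("linearized eigenvalues:", eig_lin)
print("final x:", x_final, "final theta:", theta_final, "distance travelled in y:", y_final)
```

In the shifted coordinates, the closed loop satisfies $\ddot{\bar{\theta}} + k_2\dot{\bar{\theta}} + (-k_1)u_c\sin\bar{\theta} = 0$. This is a damped pendulum, which is why trajectories starting near the line settle onto it while $y$ keeps increasing.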

Exercise 2.3 Carry out a discussion similar to the one above for the stabilization of a nonlinear system by dynamic state feedback control.

Example 2.4 (Estimating the region of attraction) Given a positive definite symmetric matrix of your choosing, e.g., $Q = I$, solve the Lyapunov equation

$$P(A - BK) + (A - BK)^T P = -Q$$

for $P$. This equation has a unique solution because $A - BK$ is Hurwitz [2, Theorem 4.6]. Then define the quadratic Lyapunov function $V(x) = x^T P x$. By closely following the proof of the Lyapunov indirect method [2, Theorem 4.7], one can find an estimate of the region of attraction. The idea is as follows. Write $\dot{x} = (A - BK)x + g(x)$, with $g(x) = f(x, -Kx) - (A - BK)x$. Note that $\|g(x)\|/\|x\| \to 0$ as $\|x\| \to 0$. Hence, for any $\gamma > 0$, there exists $r > 0$ such that $\|g(x)\| \leq \gamma\|x\|$ for $\|x\| \leq r$. Now, the evolution of the quadratic function $V$ along the dynamical system $\dot{x} = f(x, -Kx)$ is given by

$$L_f V(x) = x^T P(A - BK)x + x^T(A - BK)^T P x + 2x^T P g(x) = -x^T Q x + 2x^T P g(x).$$

Now, take $\gamma < \lambda_{\min}(Q)/(2\|P\|)$. For $\|x\| \leq r$, $x \neq 0$, we have

$$L_f V(x) \leq -\lambda_{\min}(Q)\|x\|^2 + 2\gamma\|P\|\|x\|^2 < 0.$$

Therefore, on $B(0, r) \setminus \{0\}$, the function $V$ is strictly decreasing along solutions. The last step is to determine a value $c$ such that the sublevel set $\Omega_c = \{x \mid V(x) \leq c\}$ is contained in this ball. Since $V(x) \geq \lambda_{\min}(P)\|x\|^2$, any $c < \lambda_{\min}(P)r^2$ works, and $\Omega_c$ is then an estimate of the region of attraction. •
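The recipe of Example 2.4 can be applied to the pendulum of Example 2.1 with the Figure 1 values. This sketch assumes NumPy and SciPy, and derives the bound on $g$ by hand for $\theta_{des} = \pi/2$ only. In that case $g(x) = (0, a(1 - \cos x_1))$. Since $0 \leq 1 - \cos x_1 \leq x_1^2/2$, we have $\|g(x)\| \leq (a/2)\|x\|^2$, so $\gamma = ar/2$ works on $B(0, r)$. The factor 0.99 (to make the inequality strict) and the boundary sampling are illustrative choices. The sampling is only a sanity check, not a proof.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

# Example 2.1 with the Figure 1 values.
a, b, c, k1, k2 = 1.0, 1.0, 1.0, 1.0, 1.0
theta_des = np.pi / 2
A_cl = np.array([[0.0, 1.0], [-a * np.cos(theta_des) - c * k1, -b - c * k2]])
Q = np.eye(2)

P = solve_continuous_lyapunov(A_cl.T, -Q)    # P A_cl + A_cl^T P = -Q
P = 0.5 * (P + P.T)
eig_P = np.linalg.eigvalsh(P)                # ascending order
lam_min_P, norm_P = eig_P[0], eig_P[-1]      # ||P||_2 is the largest eigenvalue of P
lam_min_Q = np.linalg.eigvalsh(Q)[0]

# With gamma(r) = a r / 2:  gamma(r) < lam_min(Q) / (2 ||P||)  <=>  r < r_star.
r_star = lam_min_Q / (a * norm_P)
r = 0.99 * r_star                            # keep the inequality strict
c_level = lam_min_P * r**2                   # Omega_c = {V <= c_level} lies inside B(0, r)


def f_cl(x):
    """Closed-loop vector field f(x, -K x) in the shifted coordinates."""
    u = -(k1 * x[0] + k2 * x[1])
    return np.array([x[1],
                     -a * (np.sin(x[0] + theta_des) - np.sin(theta_des)) - b * x[1] + c * u])


# Sanity check: L_f V(x) = 2 x^T P f(x) should be negative on the boundary V(x) = c_level.
vdots = []
for ang in np.linspace(0.0, 2 * np.pi, 400, endpoint=False):
    d = np.array([np.cos(ang), np.sin(ang)])
    x = d * np.sqrt(c_level / (d @ P @ d))   # scale the direction so that V(x) = c_level
    vdots.append(2 * x @ P @ f_cl(x))

print("r* =", r_star, "c =", c_level, "max L_f V on boundary =", max(vdots))
```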

Remark 2.5 (Determining the appropriate gains) Computing conditions on $K$ such that $A - BK$ is Hurwitz can become computationally intensive. The direct method is to find the eigenvalues of the matrix and determine suitable conditions on the gains in $K$. Alternative approaches include the Routh criterion and the Gershgorin disk theorem. The latter states that the eigenvalues of an $n \times n$ matrix $F$ are contained in

$$\bigcup_{i \in \{1, \dots, n\}} \Big\{ z \in \mathbb{C} \;\Big|\; |z - f_{ii}| \leq \sum_{j=1, j \neq i}^{n} |f_{ij}| \Big\}.$$

Hence, if every one of these disks lies in the open left half-plane, then $F$ is Hurwitz. •
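The Gershgorin test of Remark 2.5 takes only a few lines with NumPy. This sketch is an illustration under that assumption; the function names are hypothetical. It also shows that the test is only sufficient. The matrix $A - BK$ from Example 2.1 with the Figure 1 values is Hurwitz, yet its first disk (center 0, radius 1) touches the imaginary axis.

```python
import numpy as np


def gershgorin_discs(F):
    """Centers f_ii and radii sum_{j != i} |f_ij| of the row Gershgorin discs of F."""
    F = np.asarray(F)
    centers = np.diag(F)
    radii = np.sum(np.abs(F), axis=1) - np.abs(centers)
    return centers, radii


def gershgorin_certifies_hurwitz(F):
    """Sufficient (not necessary) test: every disc lies in the open left half-plane."""
    centers, radii = gershgorin_discs(F)
    return bool(np.all(np.real(centers) + radii < 0))


F1 = np.array([[-3.0, 1.0], [1.0, -4.0]])   # hypothetical, diagonally dominant
F2 = np.array([[0.0, 1.0], [-1.0, -2.0]])   # A - BK of Example 2.1 with the Figure 1 values
for F in (F1, F2):
    print(gershgorin_certifies_hurwitz(F), np.linalg.eigvals(F))
```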

3 Stabilization by output feedback control

Consider the system

$$\dot{x} = f(x, u),$$
$$y = h(x),$$

where $f(0, 0) = 0$, $h(0) = 0$, and $f$, $h$ are continuously differentiable in a domain $D_x \times D_u \subset \mathbb{R}^n \times \mathbb{R}^p$ that contains $(0, 0)$. Our objective is to design an output feedback law to stabilize the system.

Linearization of the system around $x = 0$, $u = 0$ yields

$$\dot{x} = Ax + Bu, \qquad y = Cx,$$

with

$$A = \frac{\partial f}{\partial x}(x, u)\Big|_{x=0,u=0}, \qquad B = \frac{\partial f}{\partial u}(x, u)\Big|_{x=0,u=0}, \qquad C = \frac{\partial h}{\partial x}(x)\Big|_{x=0}.$$

We try a dynamic output feedback controller

$$\dot{z} = Fz + Gy,$$
$$u = Lz + My,$$

such that the closed-loop matrix (in the variables $(x, z)$)

$$\begin{bmatrix} A + BMC & BL \\ GC & F \end{bmatrix}$$

is Hurwitz. This is not always possible. When $(A, B)$ is stabilizable and $(A, C)$ is detectable, an example of such a design is the observer-based controller

$$z = \hat{x}, \qquad F = A - BK - HC, \qquad G = H, \qquad L = -K, \qquad M = 0,$$

with $K$ and $H$ such that $A - BK$ and $A - HC$ are Hurwitz. In this case, the closed-loop matrix is

$$\begin{bmatrix} A & -BK \\ HC & A - BK - HC \end{bmatrix}, \qquad (5)$$

whose eigenvalues are exactly those of $A - BK$ and $A - HC$.

Exercise 3.1 Prove that the eigenvalues of the matrix (5) are the eigenvalues of A − BK and A − HC.

[Hint: play with the eigenvectors]

When the controller is applied to the nonlinear system, it results in the system

$$\dot{x} = f(x, Lz + Mh(x)),$$
$$\dot{z} = Fz + Gh(x).$$

The origin $x = 0$, $z = 0$ is an equilibrium point of this system, and its linearization has an associated Hurwitz matrix. Therefore, the origin is exponentially stable. A Lyapunov function for the closed-loop system can be obtained by solving a Lyapunov equation for the Hurwitz matrix.
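The separation property behind (5) can be illustrated numerically; this does not replace the proof asked for in Exercise 3.1. The sketch below assumes NumPy. It uses the pendulum matrices and gains that appear later in Example 3.2 and Figure 4 ($a = c = 10$, $b = 1$, $\theta_{des} = \pi/4$, $K = [1, 1]$, $H = [1, 1]^T$). It checks that every eigenvalue of $A - BK$ and $A - HC$ appears in the spectrum of the closed-loop matrix (5).

```python
import numpy as np

a, b, c = 10.0, 1.0, 10.0
theta_des = np.pi / 4
A = np.array([[0.0, 1.0], [-a * np.cos(theta_des), -b]])
B = np.array([[0.0], [c]])
C = np.array([[1.0, 0.0]])
K = np.array([[1.0, 1.0]])
H = np.array([[1.0], [1.0]])

# Closed-loop matrix (5) in the variables (x, x_hat).
A_cl = np.block([[A, -B @ K], [H @ C, A - B @ K - H @ C]])
eig_cl = np.linalg.eigvals(A_cl)
eig_expected = np.concatenate([np.linalg.eigvals(A - B @ K), np.linalg.eigvals(A - H @ C)])

# Each expected eigenvalue should appear (up to round-off) in the closed-loop spectrum.
mismatch = max(np.min(np.abs(eig_cl - lam)) for lam in eig_expected)
print(np.sort_complex(eig_cl))
print("largest mismatch:", mismatch)
```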


Example 3.2 (Forced pendulum) Consider the pendulum equation

$$\ddot{\theta} = -a\sin\theta - b\dot{\theta} + cT,$$

where $a = g/l > 0$, $b = k/m \geq 0$, $c = 1/(ml^2) > 0$, $\theta$ is the angle subtended by the rod and the vertical axis, and $T$ is the torque applied to the pendulum. Suppose we want to stabilize the pendulum at an angle $\theta = \theta_{des}$. Let us choose the state variables $x_1 = \theta - \theta_{des}$ and $x_2 = \dot{\theta}$, and the control variable $u = T - \frac{a}{c}\sin\theta_{des}$. Additionally, suppose we can measure the angle $\theta$, but not the velocity $\dot{\theta}$, i.e.,

$$y = x_1 = \theta - \theta_{des}.$$

The equations now read

$$\dot{x}_1 = x_2,$$
$$\dot{x}_2 = -a(\sin(x_1 + \theta_{des}) - \sin\theta_{des}) - bx_2 + cu,$$
$$y = x_1.$$

The matrices $A$, $B$, $C$ are given by

$$A = \begin{bmatrix} 0 & 1 \\ -a\cos\theta_{des} & -b \end{bmatrix}, \qquad B = \begin{bmatrix} 0 \\ c \end{bmatrix}, \qquad C = \begin{bmatrix} 1 & 0 \end{bmatrix}.$$

We implement the dynamic output feedback controller using the observer

$$\dot{\hat{x}} = A\hat{x} + Bu + H(y - \hat{x}_1).$$

Taking $H = [h_1, h_2]^T$, it can be verified that $A - HC$ is Hurwitz if

$$h_1 + b > 0, \qquad h_1 b + h_2 + a\cos\theta_{des} > 0.$$

Now, let $K = [k_1, k_2]$ and consider

$$A - BK = \begin{bmatrix} 0 & 1 \\ -a\cos\theta_{des} - ck_1 & -b - ck_2 \end{bmatrix},$$

with eigenvalues

$$\lambda = \frac{-(ck_2 + b) \pm \sqrt{(ck_2 + b)^2 - 4(a\cos\theta_{des} + ck_1)}}{2}.$$

From here, we deduce that $A - BK$ is Hurwitz as long as

$$k_1 > -\frac{a}{c}\cos\theta_{des}, \qquad k_2 > -\frac{b}{c}.$$

The torque is then given by

$$T = \frac{a}{c}\sin\theta_{des} - K\hat{x}.$$

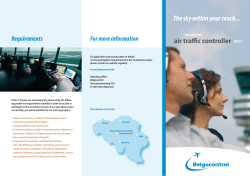

Figure 4 gives an example of the implementation of the controller.

•

[Figure 4 plots: left panel, $\theta$ versus time; right panel, $\theta - \theta_{des}$ and $z_1$ versus time.]

Figure 4: Implementation of output feedback controller in the forced pendulum example. The parameters

are θdes = π/4, k1 = k2 = h1 = h2 = 1, a = c = 10, b = 1.
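A simulation sketch of Example 3.2 with the Figure 4 parameters follows, assuming NumPy and SciPy. The nonlinear pendulum is driven by the linear observer-based controller $u = -K\hat{x}$, and only $y = x_1$ is fed to the observer. The initial angle $\theta(0) = 0.2$, with zero velocity and zero initial estimate, is an assumption; the text does not state the initial condition used in Figure 4.

```python
import numpy as np
from scipy.integrate import solve_ivp

a, b, c = 10.0, 1.0, 10.0            # parameters from Figure 4
theta_des = np.pi / 4
K = np.array([1.0, 1.0])             # k1, k2
H = np.array([1.0, 1.0])             # h1, h2
A = np.array([[0.0, 1.0], [-a * np.cos(theta_des), -b]])
B = np.array([0.0, c])


def closed_loop(t, s):
    """Nonlinear pendulum (shifted coordinates) with the observer-based controller."""
    x1, x2, xh1, xh2 = s
    xh = np.array([xh1, xh2])
    u = -K @ xh                      # u = -K x_hat, i.e. T = (a/c) sin(theta_des) - K x_hat
    y = x1                           # only the angle error is measured
    dx1 = x2
    dx2 = -a * (np.sin(x1 + theta_des) - np.sin(theta_des)) - b * x2 + c * u
    dxh = A @ xh + B * u + H * (y - xh1)   # x_hat' = A x_hat + B u + H (y - x_hat_1)
    return [dx1, dx2, dxh[0], dxh[1]]


theta0 = 0.2                         # assumed initial angle; velocity and estimate start at 0
sol = solve_ivp(closed_loop, (0.0, 20.0), [theta0 - theta_des, 0.0, 0.0, 0.0],
                rtol=1e-9, atol=1e-11)
theta = sol.y[0] + theta_des
print("final angle:", theta[-1], "target:", theta_des)
```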

4 Integral control

Recall the state feedback controller that we designed for the pendulum in Example 2.1. We came up with the torque

$$T = \frac{a}{c}\sin\theta_{des} - Kx = \frac{a}{c}\sin\theta_{des} - k_1(\theta - \theta_{des}) - k_2\dot{\theta}.$$

The feedback part of the controller can be designed to be robust to a wide range of parameter perturbations. For instance, if we know that $a/c \leq \rho$, but do not know the exact values of $a$ and $c$, we can ensure that $A - BK$ is Hurwitz by choosing

$$k_1 > \rho, \qquad k_2 > 0.$$

However, the calculation of the steady-state component $\frac{a}{c}\sin\theta_{des}$ is quite sensitive to parameter perturbations. Suppose that we compute it using the nominal values $a_0$, $c_0$ for $a$, $c$, respectively. Then we will apply the torque

$$T = \frac{a_0}{c_0}\sin\theta_{des} - k_1(\theta - \theta_{des}) - k_2\dot{\theta}$$

in the pendulum equation (4). A little bit of algebra gives the steady state of the closed-loop system as the value $\theta_{ss}$ that satisfies

$$a\sin\theta_{ss} = c\left(\frac{a_0}{c_0}\sin\theta_{des} - k_1(\theta_{ss} - \theta_{des})\right).$$

For $\theta_{des} = 0$ or $\theta_{des} = \pi$ (the equilibria of the uncontrolled system), we deduce $\theta_{ss} = \theta_{des}$. So in this case, the steady-state component is robust to parameter perturbations (can you explain why?). However, for other values, this is no longer true. For instance, for $\theta_{des} = 45$ degrees, $a = a_0$, $c = c_0/2$ and $k_1 = 3a_0/c_0$, we have $\theta_{ss} \approx 36$ degrees.
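The 36-degree figure can be reproduced by solving the steady-state equation with a bracketing root finder. The sketch below assumes SciPy's `brentq`. The nominal values $a_0 = c_0 = 10$ are an arbitrary choice; with $a = a_0$, $c = c_0/2$ and $k_1 = 3a_0/c_0$, the equation reduces to $\sin\theta_{ss} = \frac{1}{2}\sin\theta_{des} - \frac{3}{2}(\theta_{ss} - \theta_{des})$, so the result does not depend on that choice. The bracket $\theta_{des} \pm \pi/2$ is valid because the residual is strictly increasing whenever $a\cos\theta + ck_1 > 0$, which holds here.

```python
import numpy as np
from scipy.optimize import brentq


def steady_state_angle(theta_des, a, c, a0, c0, k1):
    """Solve a sin(th) = c ((a0/c0) sin(theta_des) - k1 (th - theta_des)) for th."""
    def residual(th):
        return a * np.sin(th) - c * (a0 / c0 * np.sin(theta_des) - k1 * (th - theta_des))
    return brentq(residual, theta_des - np.pi / 2, theta_des + np.pi / 2)


a0, c0 = 10.0, 10.0                  # assumed nominal values; only their ratios matter here
th_ss = steady_state_angle(np.pi / 4, a=a0, c=c0 / 2, a0=a0, c0=c0, k1=3 * a0 / c0)
print(np.degrees(th_ss))             # close to 36 degrees instead of 45
```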

Example 4.1 (Cruise control) Consider the following simple dynamical model of the evolution of the velocity $v$ of your preferred car:

$$\dot{v} = \frac{1}{m}\left(-bv + u + w\right).$$

Here $m > 0$ stands for the mass of the car, and $b > 0$ is a friction coefficient. The control $u$ stands for the engine power. Finally, $w$ accounts for the unknown hill inclination. The cruise controller of your preferred car certainly does not know the exact incline of the road at any given instant in time! Assume you set your desired speed to be $v = v_{des}$. Let us then choose the controller

$$u = bv - k(v - v_{des}).$$

The first term takes care of making $v_{des}$ an equilibrium; we select the second one to make it stable. In the absence of external disturbances (i.e., $w = 0$, flat road), we then have

$$\dot{v} = -\frac{k}{m}(v - v_{des}).$$

As long as $k > 0$, we have exponentially stabilized the system. However, what happens if the road incline is not zero? Then the closed-loop system actually reads

$$\dot{v} = \frac{1}{m}\left(-k(v - v_{des}) + w\right),$$

and the equilibrium will be

$$v_{ss} = v_{des} + \frac{w}{k}.$$

The bigger the gain $k$, the smaller the deviation from the desired velocity. However, the horsepower of your preferred car is limited, and hence you cannot keep cranking up the engine! It is actually better to use integral control, as we demonstrate next. •
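The steady-state offset of the proportional cruise controller can be checked by simulation. The sketch below assumes NumPy and SciPy. The values $m = 1$ and $k = 2$ are assumptions, and the perturbed pair $(b, w) = (0.9, 0.1)$ mirrors the perturbation quoted in the caption of Figure 6. When the true friction $b$ differs from the nominal $b_{nom}$ used in the controller, setting $\dot{v} = 0$ gives $v_{ss} = (kv_{des} + w)/(k + b - b_{nom})$. This reduces to $v_{des} + w/k$ when $b = b_{nom}$.

```python
import numpy as np
from scipy.integrate import solve_ivp

m, b_nom, k, v_des = 1.0, 1.0, 2.0, 1.0   # m = 1 and k = 2 are assumptions


def proportional_cruise(t, v, b_true, w):
    """Example 4.1 closed loop; the controller uses the nominal friction b_nom."""
    u = b_nom * v[0] - k * (v[0] - v_des)
    return [(-b_true * v[0] + u + w) / m]


def predicted_steady_state(b_true, w):
    """Solve vdot = 0, i.e. (b_nom - b_true) v - k (v - v_des) + w = 0, for v."""
    return (k * v_des + w) / (k + b_true - b_nom)


results = {}
for b_true, w in [(1.0, 0.0), (1.0, 0.1), (0.9, 0.1)]:
    sol = solve_ivp(proportional_cruise, (0.0, 30.0), [0.0], args=(b_true, w),
                    rtol=1e-9, atol=1e-11)
    results[(b_true, w)] = (sol.y[0, -1], predicted_steady_state(b_true, w))
    print(f"b = {b_true}, w = {w}: simulated {results[(b_true, w)][0]:.4f}, "
          f"predicted {results[(b_true, w)][1]:.4f}")
```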

In this section, we present an integral control approach that fixes the steady-state mismatch problem. The idea is to reformulate our objective as an asymptotic regulation problem as defined in Section 1.3. The resulting controller achieves asymptotic regulation under all parameter perturbations that do not destroy the stability of the closed-loop system. Consider the system

$$\dot{x} = f(x, u, w),$$
$$y = h(x, w),$$

where $w \in \mathbb{R}^l$ is a vector of unknown constant parameters and disturbances. The functions $f : \mathbb{R}^n \times \mathbb{R}^p \times \mathbb{R}^l \to \mathbb{R}^n$ and $h : \mathbb{R}^n \times \mathbb{R}^l \to \mathbb{R}^p$ are continuously differentiable in $(x, u)$ and continuous in $w$ in a domain $D_x \times D_u \times D_w \subset \mathbb{R}^n \times \mathbb{R}^p \times \mathbb{R}^l$. Let $r \in D_r \subset \mathbb{R}^p$ be a constant reference that is available online. Our objective is to design a feedback controller such that

$$y(t) \to r, \qquad t \to \infty.$$

Rephrasing the control problem. This regulation task will be achieved by stabilizing the system at an equilibrium point where $y = r$. Let

$$v = \begin{bmatrix} r \\ w \end{bmatrix} \in D_v = D_r \times D_w.$$

Assume that for each $v \in D_v$, there exists a unique pair $(x_{ss}, u_{ss})$ that depends continuously on $v$ and satisfies the equations

$$0 = f(x_{ss}, u_{ss}, w), \qquad (6a)$$
$$r = h(x_{ss}, w). \qquad (6b)$$


To introduce integral action, we set $e = y - r$ and integrate this regulation error,

$$\dot{\sigma} = y - r.$$

Together with the state equation, we then have

$$\dot{x} = f(x, u, w), \qquad (7a)$$
$$\dot{\sigma} = h(x, w) - r. \qquad (7b)$$

The control task is now to design a feedback controller that stabilizes this system at an equilibrium point $(x_{ss}, \sigma_{ss})$, where $\sigma_{ss}$ produces the desired $u_{ss}$. See Figure 5.

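[Figure 5 diagram: block diagram of the integral controller, with signals $r$, $y$, $-\sigma$, $u$ and a summing junction with signs $+$ and $-$.]

Figure 5: Integral control.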

The fact that this controller is robust to all parameter perturbations that do not destroy the stability of the

closed-loop system can be explained as follows. The inclusion of the integrator causes the regulation error to

be zero at equilibrium. Parameter perturbations will change the equilibrium point, but the condition e = 0

will still be maintained. Therefore, as long as the perturbed equilibrium point remains asymptotically stable,

regulation will be achieved.

4.1 Integral control via linearization: state feedback integral controller

Let us devise a local solution to the regulation problem via linearization, using state feedback. Our objective is to design a feedback controller $u = \gamma(x, \sigma)$ to stabilize the system (7) at $(x_{ss}, \sigma_{ss})$, where $u_{ss} = \gamma(x_{ss}, \sigma_{ss})$. We consider a linear control law of the form

$$u = -K_1x - K_2\sigma. \qquad (8)$$

Substituting into the equations of the system, we get

$$\dot{x} = f(x, -K_1x - K_2\sigma, w), \qquad (9a)$$
$$\dot{\sigma} = h(x, w) - r, \qquad (9b)$$

whose equilibrium points $(\bar{x}, \bar{\sigma})$ satisfy

$$0 = f(\bar{x}, \bar{u}, w), \qquad 0 = h(\bar{x}, w) - r, \qquad \bar{u} = -K_1\bar{x} - K_2\bar{\sigma}.$$

By assumption (6), we deduce that $\bar{x} = x_{ss}$ and $\bar{u} = u_{ss}$. By choosing $K_2$ invertible, we guarantee that there is a unique solution $\sigma_{ss}$ of the equation $u_{ss} = -K_1x_{ss} - K_2\sigma_{ss}$, namely $\sigma_{ss} = -K_2^{-1}(u_{ss} + K_1x_{ss})$.


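We now have to stabilize $(x_{ss}, \sigma_{ss})$. Let $\xi = [x - x_{ss}, \sigma - \sigma_{ss}]^T$. Linearization of (9) yields

$$\dot{\xi} = (\mathcal{A} - \mathcal{B}\mathcal{K})\xi,$$

where

$$\mathcal{A} = \begin{bmatrix} A & 0 \\ C & 0 \end{bmatrix}, \qquad \mathcal{B} = \begin{bmatrix} B \\ 0 \end{bmatrix}, \qquad \mathcal{K} = \begin{bmatrix} K_1 & K_2 \end{bmatrix},$$
$$A = \frac{\partial f}{\partial x}\Big|_{x=x_{ss},u=u_{ss}}, \qquad B = \frac{\partial f}{\partial u}\Big|_{x=x_{ss},u=u_{ss}}, \qquad C = \frac{\partial h}{\partial x}\Big|_{x=x_{ss}}. \qquad (10)$$

Under appropriate conditions on $A$, $B$, $C$, one can design $\mathcal{K}$ such that $\mathcal{A} - \mathcal{B}\mathcal{K}$ is Hurwitz for all $v \in D_v$. For any such design, $K_2$ will necessarily be invertible. With this controller, $(x_{ss}, \sigma_{ss})$ is an exponentially stable equilibrium point. One can also add a term of the form $K_3e$ to the control law $u$ in (8) in order to improve performance.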
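Building the augmented matrices of (10) and choosing $\mathcal{K} = [K_1\ K_2]$ by pole placement can be sketched as follows. This assumes NumPy and `scipy.signal.place_poles`, which returns a gain $K$ such that the eigenvalues of $A - BK$ equal the requested poles. Pole placement is one possible design method; the text does not prescribe it. The data (the cruise-control linearization with $m = b = 1$) and the pole locations $\{-1, -2\}$ are hypothetical choices. The sketch also checks that the resulting $K_2$ is invertible, as the text predicts.

```python
import numpy as np
from scipy.signal import place_poles


def augment(A, B, C):
    """Augmented matrices of (10) for the state (x - x_ss, sigma - sigma_ss)."""
    n, p = B.shape
    q = C.shape[0]
    A_aug = np.block([[A, np.zeros((n, q))], [C, np.zeros((q, q))]])
    B_aug = np.vstack([B, np.zeros((q, p))])
    return A_aug, B_aug


# Hypothetical data: the cruise-control linearization with m = b = 1.
m, b = 1.0, 1.0
A = np.array([[-b / m]])
B = np.array([[1.0 / m]])
C = np.array([[1.0]])
A_aug, B_aug = augment(A, B, C)

# place_poles returns K with eig(A_aug - B_aug K) equal to the requested poles.
K_aug = place_poles(A_aug, B_aug, np.array([-1.0, -2.0])).gain_matrix
n = A.shape[0]
K1, K2 = K_aug[:, :n], K_aug[:, n:]
print("K =", K_aug)
print("closed-loop eigenvalues:", np.linalg.eigvals(A_aug - B_aug @ K_aug))
print("det K2 =", np.linalg.det(K2))
```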

Example 4.2 (Cruise control revisited) Consider the cruise control equation

$$\dot{v} = \frac{1}{m}\left(-bv + u + w\right).$$

Let us design an integral controller that stabilizes the system at a desired velocity $v_{des}$, no matter what unknown road incline our car faces. We choose the state variable $x = v - v_{des}$ and the output function $y = x$ (i.e., we can measure the difference between the actual and the intended velocities). The unknown disturbance is just $w \in \mathbb{R}$. Our equations then look like

$$\dot{x} = \frac{1}{m}\left(-b(x + v_{des}) + u + w\right),$$
$$\dot{\sigma} = x - r.$$

Clearly, given $(r, w)$, there exists a unique pair $(x_{ss}, u_{ss})$ such that

$$0 = \frac{1}{m}\left(-b(x_{ss} + v_{des}) + u_{ss} + w\right),$$
$$0 = x_{ss} - r.$$

Solving these equations, we get

$$x_{ss} = r, \qquad u_{ss} = -w + b(x_{ss} + v_{des}).$$

We aim for an integral controller of the form

$$u = -k_1x - k_2\sigma.$$

We should choose $\sigma_{ss}$ in such a way that it produces $u_{ss}$, i.e., such that $u_{ss} = -k_1x_{ss} - k_2\sigma_{ss}$. From this, we deduce that

$$\sigma_{ss} = \frac{1}{k_2}\left(-u_{ss} - k_1x_{ss}\right) = \frac{1}{k_2}\left(w - b(r + v_{des}) - k_1r\right).$$

We therefore want to stabilize $(x_{ss}, \sigma_{ss})$. Note that our reference is $r = 0$ (so that the output $y = x$ asymptotically converges to 0). With the same notation as above, we have

$$\dot{\xi} = (\mathcal{A} - \mathcal{B}\mathcal{K})\xi,$$

where

$$\xi = \begin{bmatrix} x - x_{ss} \\ \sigma - \sigma_{ss} \end{bmatrix}, \qquad \mathcal{A} = \begin{bmatrix} -\frac{b}{m} & 0 \\ 1 & 0 \end{bmatrix}, \qquad \mathcal{B} = \begin{bmatrix} \frac{1}{m} \\ 0 \end{bmatrix}, \qquad \mathcal{K} = \begin{bmatrix} k_1 & k_2 \end{bmatrix}.$$

Therefore, we have

$$\mathcal{A} - \mathcal{B}\mathcal{K} = \begin{bmatrix} -\frac{b + k_1}{m} & -\frac{k_2}{m} \\ 1 & 0 \end{bmatrix},$$

with eigenvalues

$$\lambda = \frac{-(b + k_1) \pm \sqrt{(b + k_1)^2 - 4k_2m}}{2m}.$$

Therefore, choosing $k_2 > 0$ and $k_1 > -b$ guarantees that $(x_{ss}, \sigma_{ss})$ is an exponentially stable equilibrium point. In the original variables, the controller reads

$$u = -k_1(v - v_{des}) - k_2\sigma, \qquad \dot{\sigma} = v - v_{des}.$$

Note the difference with respect to the controller that we computed previously,

$$u = bv - k(v - v_{des}).$$

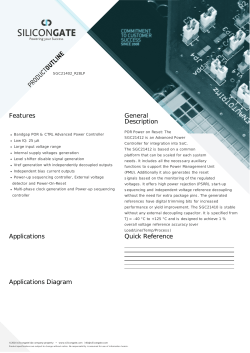

Figure 6 gives a comparison of the performance of both controllers.

•

[Figure 6 plots: velocity versus time, without (left) and with (right) integral action.]

Figure 6: Comparison of the performance of the feedback controllers without (left) and with integral action

(right). The desired velocity is vdes = 1. The solid lines represent the evolution under perfect information

of the friction coefficient b = 1 and the road incline w = 0. The dashed lines represent the evolution under

slight perturbations of these parameters (b = .9, w = .1). The control gains are k1 = 2, k2 = 1.
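The following sketch reproduces the qualitative behavior of the right panel of Figure 6 under the perturbed parameters $b = 0.9$, $w = 0.1$ with gains $k_1 = 2$, $k_2 = 1$. It assumes NumPy and SciPy. The mass $m = 1$ and the initial condition $v(0) = 0$, $\sigma(0) = 0$ are assumptions, since the text does not give them. The controller never uses $b$ or $w$, yet the velocity converges to $v_{des}$. Meanwhile, the integrator settles at $\sigma_{ss} = (w - bv_{des})/k_2$, the value derived above for $r = 0$.

```python
import numpy as np
from scipy.integrate import solve_ivp

m, v_des = 1.0, 1.0          # m = 1 is an assumption (not stated in the text)
k1, k2 = 2.0, 1.0            # gains from Figure 6
b_true, w = 0.9, 0.1         # perturbed friction and incline (dashed lines in Figure 6)


def integral_cruise(t, s):
    """Closed loop of Example 4.2: the controller uses neither b nor w."""
    v, sigma = s
    u = -k1 * (v - v_des) - k2 * sigma
    return [(-b_true * v + u + w) / m, v - v_des]


sol = solve_ivp(integral_cruise, (0.0, 60.0), [0.0, 0.0], rtol=1e-9, atol=1e-11)
v_final, sigma_final = sol.y[:, -1]

# Predicted integrator value at steady state (r = 0, x_ss = 0).
sigma_ss = (w - b_true * v_des) / k2
print("final velocity:", v_final, "sigma:", sigma_final, "predicted sigma_ss:", sigma_ss)
```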

Example 4.3 (Forced pendulum) Consider the pendulum equation

$$\ddot{\theta} = -a\sin\theta - b\dot{\theta} + cT,$$

where $a = g/l > 0$, $b = k/m \geq 0$, $c = 1/(ml^2) > 0$, $\theta$ is the angle subtended by the rod and the vertical axis, and $T$ is the torque applied to the pendulum. Suppose we want to regulate $\theta$ to $\theta_{des}$. Let us choose the state variables $x_1 = \theta - \theta_{des}$ and $x_2 = \dot{\theta}$, the control variable $T$, and the output $y = x_1$. The equations now read

$$\dot{x}_1 = x_2,$$
$$\dot{x}_2 = -a\sin(x_1 + \theta_{des}) - bx_2 + cT,$$
$$y = x_1.$$


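Note that our reference is $r = 0$ (so that the output $y = x_1$ asymptotically converges to 0). From (6), we get the equilibrium point $x_{ss} = (0, 0)$, with associated torque $T_{ss} = \frac{a}{c}\sin\theta_{des}$. We design an integral controller of the form

$$T = -k_1x_1 - k_2x_2 - k_3\sigma.$$

Note that $\sigma_{ss} = -\frac{1}{k_3}T_{ss}$.

The matrices $A$, $B$, $C$ in equation (10) are given by

$$A = \begin{bmatrix} 0 & 1 \\ -a\cos\theta_{des} & -b \end{bmatrix}, \qquad B = \begin{bmatrix} 0 \\ c \end{bmatrix}, \qquad C = \begin{bmatrix} 1 & 0 \end{bmatrix}.$$

One can deduce that the matrix

$$\mathcal{A} - \mathcal{B}\mathcal{K} = \begin{bmatrix} 0 & 1 & 0 \\ -a\cos\theta_{des} - ck_1 & -b - ck_2 & -ck_3 \\ 1 & 0 & 0 \end{bmatrix}$$

has characteristic polynomial $\lambda^3 + (b + k_2c)\lambda^2 + (a\cos\theta_{des} + k_1c)\lambda + k_3c$, so that, by the Routh criterion, it is Hurwitz if

$$b + k_2c > 0, \qquad (b + k_2c)(a\cos\theta_{des} + k_1c) > k_3c, \qquad k_3c > 0.$$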

Suppose that we do not know the values of the parameters $a$, $b$, $c$, but we have upper bounds $a/c \leq \rho_1$ and $1/c \leq \rho_2$. Then, the choice

$$k_2 > 0, \qquad k_3 > 0, \qquad k_1 > \rho_1 + \frac{k_3}{k_2}\rho_2$$

ensures that $\mathcal{A} - \mathcal{B}\mathcal{K}$ is Hurwitz. The feedback control law is given by

$$T = -k_1(\theta - \theta_{des}) - k_2\dot{\theta} - k_3\sigma,$$
$$\dot{\sigma} = \theta - \theta_{des},$$

which is the classical PID (Proportional-Integral-Derivative) controller. Note the difference with respect to the controller that we computed previously,

$$T = \frac{a}{c}\sin\theta_{des} - k_1(\theta - \theta_{des}) - k_2\dot{\theta}.$$

Stabilization with the PID controller will be achieved under all parameter perturbations that preserve the conditions $b + k_2c > 0$, $(b + k_2c)(a\cos\theta_{des} + k_1c) > k_3c$ and $k_3c > 0$. Figure 7 gives a comparison of the performance of both controllers. •
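The comparison in Figure 7 can be sketched in simulation. The sketch assumes NumPy and SciPy and uses the nominal values ($a = 10$, $b = 1$, $c = 10$) for the feedforward term and the perturbed plant $a = 10$, $b = 0.5$, $c = 5$. The text does not give the gains used for Figure 7. The values $k_1 = 3$, $k_2 = k_3 = 1$ below are assumptions, chosen so that they satisfy the robust choice above with $\rho_1 = 2$ and $\rho_2 = 0.2$; these bounds hold for both parameter sets. The initial condition $\theta(0) = \dot{\theta}(0) = \sigma(0) = 0$ is also assumed. The controller of Example 2.1 with nominal feedforward settles at a wrong angle, while the PID controller reaches $\theta_{des}$.

```python
import numpy as np
from scipy.integrate import solve_ivp

theta_des = np.pi / 4
a_nom, c_nom = 10.0, 10.0          # nominal values used for the feedforward term
a, b, c = 10.0, 0.5, 5.0           # perturbed plant (dashed lines in Figure 7)
k1, k2, k3 = 3.0, 1.0, 1.0         # assumed gains (not given in the text)


def hurwitz_cubic(p2, p1, p0):
    """Routh-Hurwitz test for s^3 + p2 s^2 + p1 s + p0."""
    return p2 > 0 and p0 > 0 and p2 * p1 > p0


def pd_feedforward(t, s):
    """Controller of Example 2.1, with feedforward computed from nominal a, c."""
    theta, omega = s
    T = a_nom / c_nom * np.sin(theta_des) - k1 * (theta - theta_des) - k2 * omega
    return [omega, -a * np.sin(theta) - b * omega + c * T]


def pid(t, s):
    """PID controller of Example 4.3; sigma integrates the angle error."""
    theta, omega, sigma = s
    T = -k1 * (theta - theta_des) - k2 * omega - k3 * sigma
    return [omega, -a * np.sin(theta) - b * omega + c * T, theta - theta_des]


t_span = (0.0, 80.0)
sol_pd = solve_ivp(pd_feedforward, t_span, [0.0, 0.0], rtol=1e-9, atol=1e-11)
sol_pid = solve_ivp(pid, t_span, [0.0, 0.0, 0.0], rtol=1e-9, atol=1e-11)
theta_pd, theta_pid = sol_pd.y[0, -1], sol_pid.y[0, -1]

print("Hurwitz for perturbed plant:",
      hurwitz_cubic(b + k2 * c, a * np.cos(theta_des) + k1 * c, k3 * c))
print("final angle, nominal feedforward:", theta_pd)
print("final angle, PID:", theta_pid, "target:", theta_des)
```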

The case of output feedback is similar and slightly more involved, but the design rationale is the same, see [2,

pp. 484-485].

References

[1] S. S. Sastry, Nonlinear Systems: Analysis, Stability and Control, ser. Interdisciplinary Applied Mathematics, no. 10. Springer, 1999.

[2] H. K. Khalil, Nonlinear Systems, 3rd ed. Prentice Hall, 2002.


[Figure 7 plots: $\theta$ versus time, without (left) and with (right) integral action.]

Figure 7: Comparison of the performance of the feedback controllers without (left) and with integral action

(right). The desired angle is θdes = π/4. The solid lines correspond to the evolution of a system with a = 10,

b = 1 and c = 10, and perfect information on these parameters. The dashed lines correspond to the evolution

of a system with a = 10, b = .5 and c = 5, but with the non-integral controller designed for the system with

values a = 10, b = 1 and c = 10.
