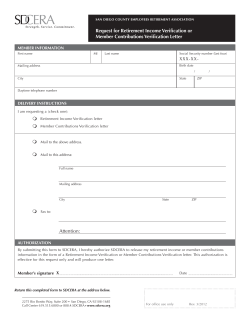

Document 208185